Strong website performance is no longer a “nice to have” metric — it’s a critical part of your user experience.

Slow loading times and laggy pages tank conversion rates. They serve up a negative first impression of your brand and can push even your most loyal customers to greener pastures.

When we found out our chat widget had started negatively impacting our customers’ Google Lighthouse scores — an important performance metric — we immediately started searching for a solution.

Live chat is a notoriously resource-intensive category, but we were able to cut our entry point bundle in half using the process I lay out in this article. As a result, we reduced the Lighthouse score impact to just one point, compared with a control.

Here’s what we’ll cover:

- Live chat widgets: form and function

- Initial negative impact of our chat widget

- Analysis and bundle reorganization

- Results and impact of the bundle reorganization

- Preventing future regression

Form and function of live chat widgets

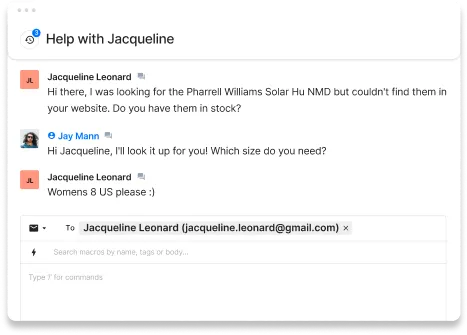

Chat widgets are small apps that allow visitors to get quicker results without leaving the webpage they’re on. The chat window usually sits in the bottom corner of the screen, when open.

Here is an example:

Live chat is especially helpful on ecommerce websites, because retail shoppers expect quicker responses. Repetitive questions involving order status, return policies, and similar situations are easily resolved in chat, and it can also provide a starting point for more complex inquiries.

Because merchants make up the bulk of our customers at Gorgias, our live chat feature is a major part of our product offering.

Our live chat feature is a regular React Redux application rendered in an iframe. It may appear simple and limited, but its features extend beyond simple chat to include campaigns, a self-service portal, and a widget API.

We implemented code-splitting from the beginning to reduce bundle size, leaving us with the following chunks:

- An entry point chunk, which contained React, Redux and other essential modules

- A chat window chunk

- A chunk with a phone number input component

Unfortunately, that initial action wasn’t enough to prevent performance issues.

Initial negative impact of our chat widget

We started hearing from merchants that the chat widget was impacting their Google Lighthouse scores, essentially decreasing page performance. As I previously mentioned, chat widgets generally have a bad reputation in this regard. But we were seeing unacceptable drops of 15 points or more.

To put those 15 points in context, here are the Google Lighthouse ranges:

- 0 to 49 - Poor

- 50 to 89 - Needs improvement

- 90 to 100 - Good

So if your website scored 95 performance points, Lighthouse considered it “good”, but a 15-point drop caused by the chat would take it down to 80, in the “needs improvement” range.
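To make the arithmetic concrete, here is a small sketch that maps a score to the ranges listed above. The function and variable names are made up for illustration and are not part of Lighthouse itself.

```typescript
type LighthouseRating = "Poor" | "Needs improvement" | "Good";

// Maps a Lighthouse performance score (0 to 100) to the ranges listed above.
function rateLighthouseScore(score: number): LighthouseRating {
  if (!Number.isFinite(score) || score < 0 || score > 100) {
    throw new RangeError(`Score must be between 0 and 100, got ${score}`);
  }
  if (score >= 90) return "Good";
  if (score >= 50) return "Needs improvement";
  return "Poor";
}

const scoreWithoutChat = 95;
const chatPenalty = 15; // the size of the drop merchants reported

console.log(rateLighthouseScore(scoreWithoutChat)); // Good
console.log(rateLighthouseScore(scoreWithoutChat - chatPenalty)); // Needs improvement
```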

Of course, we immediately set out to find and fix the issue.

Analysis and bundle reorganization

There were several potential causes for these performance issues. To diagnose them and test potential solutions, we prioritized the possible problem areas and worked our way down the list. We also kept an open mind and looked in other areas, which allowed us to find some fixes we didn’t initially expect.

The initial entrypoint file was 195kB gzipped and the entire bundle was 343kB gzipped. By the end, we had reduced those numbers to 109kB and 308kB respectively.

Here’s what we found.

Checking for unnecessary rendered DOM elements

First, we opened a test shop with chat installed and tried to find something unusual.

It didn’t take long: The chat window chunk was loaded and the corresponding component was rendered, even if you didn't interact with the chat. It wasn't visible, because the main iframe element had a display: none property set.

Then, we moved to the Profiler tab, where we found that the browser was using a lot of CPU, as reported:

Here's what happens if you defer rendering of this component, as originally intended:

However, this deferral introduced another issue: after a visitor clicked the button to open the chat, the window appeared with a noticeable delay. The explanation is simple. Previously, the JS chunk with this component was downloaded and executed immediately; after the change, the chunk was loaded only after the first interaction.

This problem is easily fixable by using resource hints. These special HTML tags tell your browser to proactively make connections or download content before the browser normally would. We needed a resource hint called prefetch, which asks the browser to download and cache a resource with a low priority.

It looks like this:
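As a sketch, the helper below builds the markup for such a tag. The helper names and the URL are placeholders invented for this example; only the `rel`, `href` and `as` attributes come from the HTML standard.

```typescript
type ResourceHint = "prefetch" | "preload";

// Escapes the characters that would break out of a double-quoted attribute.
function escapeAttribute(value: string): string {
  return value.replace(/&/g, "&amp;").replace(/"/g, "&quot;");
}

// Builds the <link> markup for a resource hint.
function resourceHintTag(rel: ResourceHint, href: string, asType = "script"): string {
  return `<link rel="${rel}" href="${escapeAttribute(href)}" as="${escapeAttribute(asType)}">`;
}

// The URL below is a placeholder, not the real asset location.
console.log(resourceHintTag("prefetch", "https://cdn.example.com/chat-window.js"));
// <link rel="prefetch" href="https://cdn.example.com/chat-window.js" as="script">
```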

There's a similar resource hint called preload which basically does the same thing, but with higher priority. We chose prefetch, because chat assets are not as important as the resources of the main site.

Since we're using webpack to bundle the app, it's very easy to add this tag dynamically. We just added a special comment, webpackPrefetch: true, inside the dynamic import, so it looked like this:
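A minimal sketch of the pattern follows. The `loadOnFirstUse` helper and the `./ChatWindow` module path are hypothetical, and a stand-in loader replaces the real dynamic import so that the snippet runs without a bundler.

```typescript
// Wraps a loader so the underlying request starts on first use and is then reused.
function loadOnFirstUse<T>(loader: () => Promise<T>): () => Promise<T> {
  let pending: Promise<T> | undefined;
  return () => {
    if (pending === undefined) {
      pending = loader();
    }
    return pending;
  };
}

// With webpack, the loader would be a dynamic import carrying the prefetch
// magic comment (the module path is hypothetical):
//
//   const loadChatWindow = loadOnFirstUse(
//     () => import(/* webpackPrefetch: true */ "./ChatWindow"),
//   );
//
// Stand-in loader so the sketch runs on its own:
let downloads = 0;
const loadChatWindow = loadOnFirstUse(async () => {
  downloads += 1;
  return { name: "ChatWindow" };
});

loadChatWindow(); // first click: starts the download
loadChatWindow(); // later clicks: reuse the same promise
console.log(downloads); // 1
```

With the magic comment in place, webpack adds a prefetch link for the chunk once the parent chunk has loaded, so the file is usually already cached by the time the visitor opens the chat.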

Though this solution didn’t affect bundle size, it significantly increased the performance score by only loading the chat when necessary.

Analyzing bundle size

Once the rendering was working as intended, we started to search for opportunities to reduce the bundle size.

Bundle size doesn’t always affect performance. For example, here you can see almost the same amount of JS, although execution times are very different:

In most cases, however, there is a correlation between bundle size and performance. It takes the browser longer to parse and execute the additional lines of code in larger bundles.

This is especially true if the app is bundled via webpack, which wraps each module with a function to execute. This isn’t a problem with just a couple of modules, but it can add up — especially once you start getting up into the hundreds.
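The following is a deliberately simplified illustration of that wrapping, not webpack's actual runtime. Every module becomes a function that runs the first time it is required, and all names here are invented for the example.

```typescript
type ModuleExports = Record<string, unknown>;
type ModuleFactory = (
  exports: ModuleExports,
  requireModule: (id: string) => ModuleExports,
) => void;

// A toy module runtime: each module is a function that runs on first require.
function createRuntime(factories: Record<string, ModuleFactory>) {
  const cache: Record<string, ModuleExports | undefined> = {};
  let executed = 0;

  function requireModule(id: string): ModuleExports {
    const cached = cache[id];
    if (cached !== undefined) return cached;

    const factory = factories[id];
    if (factory === undefined) throw new Error(`Unknown module: ${id}`);

    const exports: ModuleExports = {};
    cache[id] = exports;
    executed += 1; // one extra function call per module in the bundle
    factory(exports, requireModule);
    return exports;
  }

  return { requireModule, executedCount: () => executed };
}

const runtime = createRuntime({
  "./math": (exports) => {
    exports.double = (n: number) => n * 2;
  },
  "./entry": (exports, requireModule) => {
    const double = requireModule("./math").double as (n: number) => number;
    exports.result = double(21);
  },
});

console.log(runtime.requireModule("./entry").result); // 42
console.log(runtime.executedCount()); // 2
```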

We used a few tools to find opportunities to reduce bundle size.

- The webpack-bundle-analyzer plugin created an interactive treemap, visualizing the content of all bundles.

- The Coverage tab inside Google Chrome DevTools helped us see which lines were loaded but not used. The minified code made it more difficult to use, but it was still insightful.

Checking that tree-shaking is working properly

Next, we discovered the client bundle included the yup validation library, which was unexpected. We use this library on the backend, but it’s not a part of the widget.

It turns out tree-shaking didn't work as intended in this situation: we had a shared file that was used by both the JS client and the backend. It contained a type declaration and a validation object, and for some reason webpack didn't eliminate the validation object.

After moving the type declaration to its own file, the bundle size was reduced dramatically, by 48kB gzipped.
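One way to picture the split is sketched below. The file names and the `ChatMessage` shape are hypothetical, and a hand-written type guard stands in for the yup schema so that the snippet has no dependencies.

```typescript
// --- chatMessage.types.ts: imported by both the widget and the backend ---
// An interface has no runtime representation, so this part compiles to nothing.
interface ChatMessage {
  text: string;
  sentAt: string; // ISO 8601 timestamp
}

// --- chatMessage.validation.ts: imported by the backend only ---
// Stand-in for the validation object. Runtime code like this (plus the library
// behind it) is what can leak into the client bundle when it shares a file
// with the type declaration.
function isChatMessage(value: unknown): value is ChatMessage {
  if (typeof value !== "object" || value === null) return false;
  const candidate = value as Record<string, unknown>;
  return typeof candidate.text === "string" && typeof candidate.sentAt === "string";
}

console.log(isChatMessage({ text: "Hi", sentAt: "2021-03-01T10:00:00Z" })); // true
console.log(isChatMessage({ text: 42 })); // false
```

In TypeScript 3.8 and later, `import type` makes the intent explicit on the widget side, because type-only imports are always erased from the emitted JavaScript.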

Lazy loading big libraries

We also discovered that the Segment analytics SDK took up 37.8 kB gzipped.

Since we don't use this SDK on initial load, we created a separate chunk for this library and started to load it only when it's needed.
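The idea can be sketched as a tracker that loads the SDK the first time an event is sent. The `AnalyticsSdk` interface and `createLazyTracker` helper are assumptions made for this example and do not reflect the real Segment API. In a webpack build, the loader would be a dynamic import of the SDK module.

```typescript
// Minimal shape assumed for the SDK; the real Segment API is richer.
interface AnalyticsSdk {
  track(event: string): void;
}

// Returns a track() function that loads the SDK on the first call only
// and forwards every event once the SDK is available.
function createLazyTracker(loadSdk: () => Promise<AnalyticsSdk>) {
  let sdk: Promise<AnalyticsSdk> | undefined;
  return function track(event: string): Promise<void> {
    if (sdk === undefined) {
      sdk = loadSdk(); // in a webpack build: a dynamic import of the SDK chunk
    }
    return sdk.then((loaded) => loaded.track(event));
  };
}

// Stand-in SDK so the sketch runs on its own.
const receivedEvents: string[] = [];
let sdkLoads = 0;
const track = createLazyTracker(async () => {
  sdkLoads += 1;
  return {
    track: (event: string) => {
      receivedEvents.push(event);
    },
  };
});

console.log(sdkLoads); // 0, nothing is loaded until the first event
track("chat_opened");
track("message_sent");
console.log(sdkLoads); // 1, both events share a single load
```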

Separating certain libraries out of the main chunk

By looking into the chart from webpack-bundle-analyzer, we realized that it was possible to move React Router's code from the main chunk to the chunk with the chat window component. It reduced entrypoint size by 3.7kB and removed unnecessary render cycles, according to React Profiler.

We also found that the Day.js library was included in the entrypoint chunk, which seemed odd. We actively use this library inside the Chat Window component, so we expected to see it only inside the chunk related to that component.

In one of the initialization methods, we found usage of utc() and isBefore() from this library, functionality that is already present in the native Date API. To parse a date string in ISO format, you can pass it to new Date(), and to compare two dates you can use the < operator. By rewriting this code, we were able to reduce entrypoint size by 6.67kB gzipped. Not a lot, but it’s all starting to add up.
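A minimal sketch of such a replacement, assuming both inputs are ISO 8601 strings with an explicit offset or a trailing Z (the function name is made up for this example):

```typescript
// Returns true when the first ISO 8601 timestamp is strictly earlier than the second.
function isBeforeIso(first: string, second: string): boolean {
  const firstTime = new Date(first).getTime();
  const secondTime = new Date(second).getTime();
  if (Number.isNaN(firstTime) || Number.isNaN(secondTime)) {
    throw new RangeError("Both arguments must be valid ISO 8601 date strings");
  }
  // Comparing the millisecond values is equivalent to using < on the Date objects.
  return firstTime < secondTime;
}

console.log(isBeforeIso("2021-03-01T09:59:59Z", "2021-03-01T10:00:00Z")); // true
// The same instant written with different offsets is not "before" itself.
console.log(isBeforeIso("2021-03-01T10:00:00Z", "2021-03-01T12:00:00+02:00")); // false
```

One caveat: date-time strings without an offset are interpreted in the local time zone, so timestamps should carry an explicit offset if the comparison has to behave the same everywhere.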

Finding alternatives for big libraries

Another offender was the official Sentry client (23.4kB gzipped). Its size is a known issue that has not been resolved yet.

One option is to lazy load this SDK. But in this case, there was a risk that we could miss errors occurring before the SDK fully loaded. We followed another approach, using an alternative called micro-sentry. It’s only 2kB and covered all functionality that we needed.

We also tried to replace React with Preact, which worked really well and decreased the bundle size by 33kB in gzip. However, we couldn't find a big difference in the final performance score.

After further discussion with the team, we decided not to use it for now. We think the React team could introduce some interesting features in new versions (for example, concurrent mode looks very promising), and it would take the Preact team some time to adopt them. It happened before with hooks: the stable Preact version of the React feature followed a full year later.

Finding more compression opportunities

From further inspection, we found the mp3 file used for the notification sound could be compressed using FFmpeg without a noticeable difference in sound, saving 17.5kB gzipped.

We also found that we used the TTF format for font files, which is not a compressed format. We converted them to WOFF2 and WOFF formats, which reduced size by 23kB gzipped for each font file, or 115kB in total.

We didn't notice any differences in performance score after these changes, but it was not a redundant exercise. With these changes, we transfer less data and use fewer network resources, which could benefit customers with a poor network connection.

Delivering chat assets from the browser cache

We already used a content delivery network (CDN) to improve loading time, but we were able to reconfigure its cache policies to make it more efficient. Instead of being downloaded every time a user visits the page, the chat is downloaded over the network only on the first visit, and all subsequent requests use the version from the browser cache.

A CDN is a very good way to deliver assets to clients, because CDN providers store a cached version of chat application assets in multiple geographical locations. Then, these assets are served based on the visitor's location. For example, when someone in London accesses the website with our chat, chat assets are downloaded from a server in the United Kingdom.
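One common cache arrangement, shown here purely as an illustration and not as the exact configuration described above, is to cache files whose names contain a content hash for a long time and to revalidate everything else. The file-naming scheme and the function name are assumptions.

```typescript
// Picks a Cache-Control header value based on whether the file name
// contains a content hash (assumed pattern: name.<hex hash>.ext).
function cacheControlFor(fileName: string): string {
  const isFingerprinted = /\.[0-9a-f]{8,}\.(js|css|woff2?|mp3)$/.test(fileName);
  return isFingerprinted
    ? "public, max-age=31536000, immutable" // safe: a new build produces a new name
    : "no-cache"; // stored, but revalidated with the server before each use
}

console.log(cacheControlFor("chat-window.3f9a1c2b.js")); // public, max-age=31536000, immutable
console.log(cacheControlFor("loader.js")); // no-cache
```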

Results and impact of the bundle reorganization

Below, you can see how the bundle composition changed after applying the fixes we’ve mentioned. The entrypoint file was halved in size, and the total amount of JS was reduced by 35kB gzipped.

And here’s the full chart inclusive of all chat assets, including the static assets.

To see the impact of these reductions, we performed Google Lighthouse audits on our Shopify test store using three configurations:

- Without chat (as a control)

- With unoptimized chat

- With optimized chat

We also used the mobile preset to tighten up the conditions. In this mode, Lighthouse simulates a mobile network and applies CPU throttling.

Here are the results:

- Without any chat, the performance score was around 97-98 points

- With unoptimized chat, the score dropped to around 83-85 points

- With optimized chat, the score jumped back up to around 96-97 points

Not only did we improve on the original penalties, but we were able to get the performance score almost to the same level as when there is no chat enabled at all.

This is in line with, or better than, most other chat widgets we have analyzed.

Preventing future regression

To maintain the current levels of performance and impact, we added a size-limit check to our continuous integration pipeline. When you open a pull request, our CI server builds the bundle, measures its size and raises an error if it exceeds the defined limit.
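Conceptually, the check boils down to compressing the built file and comparing the result with a budget. The sketch below illustrates that idea with Node's zlib module. It is not how size-limit is implemented, and the function name and limits are invented for the example.

```typescript
import { gzipSync } from "zlib";

interface SizeCheck {
  gzippedBytes: number;
  limitBytes: number;
  ok: boolean;
}

// Gzips the given contents and reports whether they fit within the budget.
function checkGzippedSize(contents: string | Uint8Array, limitBytes: number): SizeCheck {
  const gzippedBytes = gzipSync(contents).length;
  return { gzippedBytes, limitBytes, ok: gzippedBytes <= limitBytes };
}

// Highly repetitive input compresses well and fits a 1 kB budget.
console.log(checkGzippedSize("a".repeat(100000), 1024).ok); // true
// A 1-byte budget can never be met, because the gzip header alone is larger.
console.log(checkGzippedSize("hello", 1).ok); // false
```

In a CI job, a failed check would typically end the process with a non-zero exit code so that the pull request is marked as failing.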

When you import a function, it’s not always obvious what kind of code will be added under the hood: sometimes it's just a few bytes, but other times it pulls in a large library.

This new step makes it possible to detect these regressions in a timely manner.

It's also possible to define a time limit using this tool. In this case, the tool runs a headless version of Chrome to track the time a browser takes to compile and execute your JS.

While this sounds nice in theory, we found the results from this method very unstable. There's an open issue with a suggestion on how to make measurements more stable, so hopefully we can take advantage of the time limit functionality in the future.

Think about performance before it becomes an issue

It turns out there is a lot of low-hanging fruit when it comes to performance optimization.

Just by using built-in developer tools in the browser and a plugin to generate a visual representation of the bundle, you might find a lot of opportunities to optimize performance without refactoring the whole codebase. In our case, we reduced entrypoint file size by 49% and reduced impact on the client's website significantly.

If you work on a new project, we strongly advise you to think about performance before it's too late. You can prevent the accumulation of technical debt by taking simple steps like checking bundlephobia before installing a library, adding size-limit to your build pipeline and running Lighthouse audits from time to time.