As we all locked down in March 2020 and changed our shopping habits, many brick-and-mortar retailers started their first online storefronts.

Gorgias has benefitted from the resulting ecommerce growth over the past two years, and we have grown the team to accommodate these trends. From 30 employees at the start of 2020, we are now more than 200 on our journey to delivering better customer service.

Our engineering team accounted for much of this hiring, which created some challenges and growing pains. What worked at the beginning with our team of three did not hold up when the team grew to 20 people. And the systems that scaled the team to 20 needed updates to support a team of 50. To continue to grow, we needed to build something more sustainable.

Continuous deployment — and the changes required to support it — presented a major opportunity for reaching toward the scale we aspired to. In this article I’ll explore how we automated and streamlined our process to make our developers’ lives easier and empower faster iteration.

Scaling our deployment process alongside organizational growth

Throughout the last two years of accelerated growth, we’ve identified a few things that we could do to better support our team expansion.

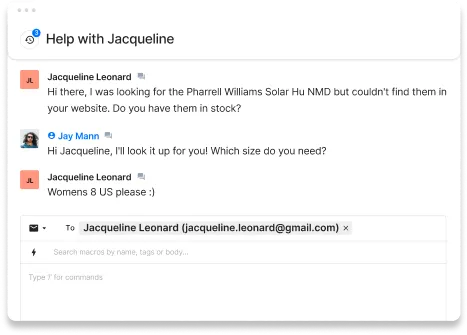

Before optimizing the feature release process, here’s how things went for our earlier, smaller team when deploying new additions:

- Open a pull request (PR) on GitHub, which would run our tests in our continuous integration (CI) system

- Merge those changes into the main branch, once the changes are approved

- Automatically deploy the new commit in the staging/testing environment, after tests run and pass on the main branch

- Deploy these changes in our production environment, assuming all goes well up until this point

- Post on the dedicated Slack channel to inform the team of the new feature, specifying the project deployed and attaching a screenshot of all commits since the last deployment

- Watch dashboards for any changes, as a failsafe on top of the alerts already in place, to check if the change needed to be rolled back

This wasn’t perfect, but it was an effective solution for a small team. However, the accelerated growth in the engineering team led to a sharp increase in the number of projects and also collaborators on each project. We began to notice several points of friction:

- The process was slow and painful. Continuous integration and continuous deployment (CI/CD) systems are meant to speed the process up, but we still needed to perform rigorous testing. We needed to find the sweet spot between speed and rigor, and we believed both left room for improvement.

- Developers didn’t always take full ownership of their changes. When a change wasn’t considered critical (which happened fairly often), a developer would often let the next developer with a critical change deploy multiple commits at the same time. When problems occurred, this made it much more difficult to diagnose the bad commit.

- It was a challenge to track version changes. To track the version of a service that was deployed in production, you had to either check our Kubernetes clusters directly or go through the screenshots in our dedicated Slack channel.

- Each project had its own set of scripts to help with deployment. We wanted to streamline our deployment process and add some consistency across all projects.

It was clear that things needed to change.

Adjusting practices and tools to lay the foundation for implementing GitOps

On the Site Reliability Engineering (SRE) team, we are fans of the GitOps approach, where Git is the single source of truth. So when the previously mentioned points of friction became more critical, we felt that all the tooling involved in GitOps practices could help us find practical solutions.

Additionally, these solutions would often rely on tooling we already had in place, such as Kubernetes and Helm.

What is GitOps?

GitOps is an operational framework. It takes application-development best practices and applies them to infrastructure automation.

The main takeaway is that in a GitOps setting, everything from code to infrastructure configuration is versioned in Git. It is then possible to create automation by leveraging the workflows associated with Git.

What are the benefits of implementation?

One class of such automation is “operations by pull requests”. In that model, pull requests and their associated events trigger various operations.

Here are some examples:

- Opening a pull request could build an application and deploy it to a preview environment

- You could add a commit to said pull request to rebuild the application and update the container image’s version in the preview environment

- By merging the pull request, you could trigger a workflow that would result in the new changes being deployed in a live production environment
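The three examples above can be sketched as a small dispatch table that maps pull request events to operations. This is only an illustration: the event names (`opened`, `synchronize`, `merged`) and the handler functions are hypothetical, and a real setup would call a CI system or cluster API instead of returning strings.

```python
from typing import Callable, Dict, List


# Hypothetical operations; real ones would trigger builds and deployments.
def deploy_preview(pr: int, sha: str) -> str:
    return f"build {sha} and deploy preview for PR {pr}"


def update_preview(pr: int, sha: str) -> str:
    return f"rebuild {sha} and update preview image for PR {pr}"


def deploy_production(pr: int, sha: str) -> str:
    return f"deploy {sha} to production"


# Hypothetical event names mapped to the operation they trigger.
HANDLERS: Dict[str, Callable[[int, str], str]] = {
    "opened": deploy_preview,
    "synchronize": update_preview,
    "merged": deploy_production,
}


def handle_event(action: str, pr: int, sha: str) -> List[str]:
    """Return the operations triggered by a pull request event."""
    handler = HANDLERS.get(action)
    # Events without a registered handler trigger nothing.
    return [handler(pr, sha)] if handler else []
```

Because the mapping lives in code that is itself versioned in Git, changing what a merge does goes through the same review process as any other change.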

Using ArgoCD as a building block

ArgoCD is a continuous deployment tool that relies on GitOps practices. It helps synchronize live environments and services to version-controlled declarative service definitions and configurations, which ArgoCD calls Applications.

In simpler terms, an Application resource tells ArgoCD to look at a Git repository and to make sure the deployed service’s configuration matches the one stored in Git.

The goal wasn’t to reinvent the wheel when implementing continuous deployment. We instead wanted to approach it in a progressive manner. This would help build developer buy-in, lay the groundwork for a smoother transition, and reduce the risk of breaking deploys. ArgoCD was an excellent step toward those goals, given how flexible it is with customizable Config Management Plugins (CMP).

ArgoCD can track a branch to keep everything up to date with the last commit, but can also make sure a particular revision is used. We decided to use the latter approach as an intermediate step, because we weren’t quite ready to deploy off the HEAD of our repositories.
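To make the two modes concrete, here is a minimal sketch that builds an Application manifest as a Python dictionary. The field layout follows the ArgoCD `Application` resource (`spec.source.targetRevision` accepts a branch name or a commit SHA), but the repository URL, chart path, names, and namespaces are placeholders we made up for illustration.

```python
import json
from typing import Any, Dict


def application_manifest(name: str, repo_url: str, chart_path: str,
                         target_revision: str, namespace: str) -> Dict[str, Any]:
    """Build an ArgoCD Application manifest as a plain dictionary."""
    return {
        "apiVersion": "argoproj.io/v1alpha1",
        "kind": "Application",
        "metadata": {"name": name, "namespace": "argocd"},
        "spec": {
            "project": "default",
            "source": {
                "repoURL": repo_url,
                "path": chart_path,
                # A branch name follows new commits; a SHA pins one revision.
                "targetRevision": target_revision,
            },
            "destination": {
                "server": "https://kubernetes.default.svc",
                "namespace": namespace,
            },
        },
    }


# Placeholder values, not taken from any real project.
REPO = "https://example.com/org/example-service.git"
tracking = application_manifest("example-service", REPO, "chart", "main", "example")
pinned = application_manifest("example-service", REPO, "chart", "3f2a9c1", "example")
print(json.dumps(pinned, indent=2))
```

The two manifests differ only in `targetRevision`, which is what makes pinning a revision a low-risk intermediate step.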

The only difference from a pipeline perspective is that the pipeline now updates the tracked revision in ArgoCD instead of running our complex deployment scripts. ArgoCD has a command-line interface (CLI) that makes this simple. Our deployment jobs only need to run the following command:
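The exact command is not reproduced here. As a rough sketch, assuming the `argocd app set` subcommand and its `--revision` flag, a deployment job could assemble the call like this. The application name and commit SHA are hypothetical.

```python
import shlex
from typing import List


def set_revision_command(app_name: str, revision: str) -> List[str]:
    """Build the argv for pointing an ArgoCD application at a revision."""
    return ["argocd", "app", "set", app_name, "--revision", revision]


# Hypothetical application name and commit SHA.
cmd = set_revision_command("example-service-production", "3f2a9c1")
print(shlex.join(cmd))
```

A CI job would pass this list to something like `subprocess.run(cmd, check=True)` after authenticating the CLI against the ArgoCD server.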

The developers’ workflow is left untouched at this point. Now comes the fun part.

Building automation into our process to move faster

Our biggest requirement for continuous deployment was to have some sort of safeguard in case things went wrong. No matter how much we trust our tests, it is always possible that a bug makes its way to our production environments.

Before implementing Argo Rollouts, we kept an eye on the system during each deployment to make sure everything was fine, and took quick action when issues were discovered. But up to that point, this process was carried out manually.

It was time to automate that process, toward the goal of raising our team’s confidence levels when deploying new changes. By providing a safety net, of sorts, we could be sure that things would go according to plan without manually checking it all.

Argo Rollouts can revert changes automatically when issues arise

Argo Rollouts is a progressive delivery controller. It relies on a Kubernetes controller and a set of custom resource definitions (CRDs) to provide us with advanced deployment capabilities on top of the ones natively offered by Kubernetes. These include features like:

- Blue/Green, which consists of deploying all the new instances of our application alongside the old version without sending traffic to them at first. We can then run some tests on the new version and flip the switch once we have made sure everything is fine. Once no more traffic is sent to the old version, we can tear it down.

- Canary deployments, which allow us to start by deploying only a small number of replicas running the new version of our software. This way, we’re able to shift a small portion of traffic to the new version. We can proceed in multiple steps, shifting only 1% of the traffic towards the new version at first, then 10%, 50% or even more depending on what we are trying to achieve.

- Analyzing new deployments’ performance. Argo Rollouts allows us to automate some checks as we are rolling out a new version of our software. To do that, we describe such checks in an AnalysisTemplate resource, which Argo Rollouts will use to query our metric provider and make sure everything is fine.

- Experiments, which are another resource Argo Rollouts introduces to allow for short-lived experiments such as A/B testing.

- Progressive delivery in Kubernetes clusters by managing the entire rollout process and allowing us to describe the desired steps of a rollout. It allows us to set a weight for a canary deployment (the ratio between pods running the new and the old versions), perform an analysis, or even pause a deployment for a given amount of time or until manual validation.
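As an illustration of how such steps fit together, the sketch below lists canary steps in the shape used by a Rollout's `spec.strategy.canary.steps` (`setWeight`, `pause`, `analysis`) and estimates the resulting replica split. The weights, durations, and the `error-rate` template name are example values, and the rounding in `replica_split` is a simplification of what the controller does, not its actual algorithm.

```python
import math
from typing import Any, Dict, List, Tuple

# Example steps; weights, durations, and template name are assumptions.
CANARY_STEPS: List[Dict[str, Any]] = [
    {"setWeight": 1},
    {"pause": {"duration": "5m"}},
    {"setWeight": 10},
    {"analysis": {"templates": [{"templateName": "error-rate"}]}},
    {"setWeight": 50},
    {"pause": {}},  # no duration: wait for manual validation
]


def replica_split(total: int, weight: int) -> Tuple[int, int]:
    """Approximate (canary, stable) replica counts for a weight in percent."""
    canary = math.ceil(total * weight / 100)
    return canary, total - canary


def describe(steps: List[Dict[str, Any]], total: int) -> List[str]:
    """Render each step as a human-readable line."""
    lines = []
    for step in steps:
        if "setWeight" in step:
            canary, stable = replica_split(total, step["setWeight"])
            lines.append(f"weight {step['setWeight']}%: {canary} canary / {stable} stable")
        elif "pause" in step:
            duration = step["pause"].get("duration")
            lines.append(f"pause {duration}" if duration else "pause until promoted")
        elif "analysis" in step:
            names = [t["templateName"] for t in step["analysis"]["templates"]]
            lines.append("run analysis: " + ", ".join(names))
    return lines


for line in describe(CANARY_STEPS, total=20):
    print(line)
```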

We were especially interested in the canary and canary analysis features. By shifting only a small portion of traffic to the new version of an application, we can limit the blast radius in case anything is wrong. Performing an analysis allows us to automatically, and periodically, check that our service’s new version is behaving as expected before promoting this canary.

Argo Rollouts is compatible with multiple metric providers including Datadog, which is the tool we use. This allows us to run a Datadog query (or multiple) every few minutes and compare the results with a threshold value we specify.

We can then configure Argo Rollouts to automatically take action, should the threshold(s) be exceeded too often during the analysis. In those cases, Argo Rollouts scales down the canary and scales the previous stable version of our software back to its initial number of replicas.
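The decision logic can be approximated in a few lines. This sketch assumes each measurement is an error rate returned by the metric provider, that a measurement fails when it exceeds the threshold, and that the rollout is aborted once failures exceed a configured limit. The function and parameter names are ours, and the real controller handles more cases (inconclusive results, errors, intervals).

```python
from typing import Iterable


def analysis_verdict(measurements: Iterable[float], threshold: float,
                     failure_limit: int) -> str:
    """Return "abort" once failed measurements exceed failure_limit, else "promote"."""
    failures = 0
    for value in measurements:
        if value > threshold:
            failures += 1
            # Stop early: the canary is scaled down as soon as the limit is passed.
            if failures > failure_limit:
                return "abort"
    return "promote"


# Error rates in percent, sampled every few minutes (made-up numbers).
print(analysis_verdict([0.2, 1.8, 0.3, 0.1], threshold=1.0, failure_limit=1))
print(analysis_verdict([0.2, 1.8, 2.4, 0.1], threshold=1.0, failure_limit=1))
```

Allowing a small number of failures avoids rolling back on a single noisy data point, at the cost of reacting slightly later to a genuine regression.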

Each service has its own metrics to monitor, but for starters we added an error rate check for all of our services.

Creating a deployment conductor to simplify configuration and deployment management

Remember when I mentioned replacing complex, project-specific deployment scripts with a single, simple command? That’s not entirely accurate, and requires some additional nuance for a full understanding.

Not only did we need to deploy software on different kinds of environments (staging and production), but also in multiple Kubernetes clusters per environment. For example, the applications composing the Gorgias core platform are deployed across multiple cloud regions all around the world.

While ArgoCD and Argo Rollouts might seem to be magic tools, we still needed some “glue” to make things stick together. Thanks to ArgoCD’s application-based mechanisms, we were able to get rid of custom scripts and use a single common tool across all projects. We named this in-house tool deployment conductor.

We even went a step further and implemented this tool in a way that accepts simple YAML configuration files. Such files allow us to declare various environments and clusters in which we want each individual project to be deployed.

When deploying a service to an environment, our tool will then go through all clusters listed for that environment.

For each of these, it will look for dedicated values.yaml files in the service’s chart’s directory. This allows developers to change a service’s configuration based on the environment and cluster in which it’s deployed. Typically, they would want to edit the number of replicas for each service depending on the geographical region.
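The lookup described above might work roughly as follows. Everything in this sketch is an assumption made for illustration: the configuration schema (shown as the dictionary a YAML file would parse into), the cluster names, and the `values.<environment>.<cluster>.yaml` naming convention are not the actual deployment conductor format.

```python
from pathlib import PurePosixPath
from typing import Any, Dict, List

# Hypothetical parsed YAML configuration for one project.
CONFIG: Dict[str, Any] = {
    "environments": {
        "staging": {"clusters": ["staging-us-east"]},
        "production": {"clusters": ["prod-us-east", "prod-eu-west"]},
    }
}


def values_files(chart_dir: str, environment: str, cluster: str) -> List[str]:
    """List candidate values files, from most generic to most specific."""
    base = PurePosixPath(chart_dir)
    return [
        str(base / "values.yaml"),
        str(base / f"values.{environment}.yaml"),
        str(base / f"values.{environment}.{cluster}.yaml"),
    ]


def deployment_plan(config: Dict[str, Any], chart_dir: str,
                    environment: str) -> Dict[str, List[str]]:
    """Map each cluster of an environment to the values files to look for."""
    clusters = config["environments"][environment]["clusters"]
    return {c: values_files(chart_dir, environment, c) for c in clusters}


for cluster, files in deployment_plan(CONFIG, "charts/example", "production").items():
    print(cluster, files)
```

With a layering like this, a per-cluster file only needs to contain the overrides that differ by region, such as the replica count.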

This makes it much easier for developers than having to manage configuration and maintain deployment scripts.

Enabling continuous deployment

This leads us to the end of our journey’s first leg: our first encounter with continuous deployment.

After we migrated all our Kubernetes Deployments to Argo Rollouts, we let our developers get acclimated for the next few weeks.

Our new setup still wasn’t fully optimized, but we felt like it was a big improvement compared to the previous one. And while we could think of many improvements to make things even more reliable before enabling continuous deployment, we decided to get feedback from the team during this period, to iterate more effectively.

Some projects introduced additional technicalities to overcome, but we easily identified a small first batch of projects where we could enable CD. Before deployment, we asked the development team if we were missing anything they needed to be comfortable with automatic deployment of their code in production environments.

With everyone feeling good about where we were at, we removed the manual step in our CI system (GitLab) for jobs deploying to production environments.

Next steps on the path to continuous deployment

We’re still monitoring this closely, but so far we haven’t had any issues. We still plan on enabling continuous deployment on all our projects in the near future, but it will be a work in progress for now.

Here are some ideas for future improvements that anticipate potential roadblocks:

- Some projects still require additional safeguards before continuous deployment. Automating database migrations is one of our biggest challenges. Helm pre-upgrade hooks would allow us to check if a migration is necessary before updating an application and run it when appropriate. But when automating these database migrations, the tricky part is avoiding heavy locks on critical tables.

- It still isn’t that easy to track what version of a service is currently deployed. When things go according to plan, the last commit in the main branch should either be deployed or currently deploying. To solve this, we could go a step further and version the state of each application for each cluster, including the identifier of the version that should be deployed. We’re also monitoring the Argo CD Image Updater repository closely. When a stable version is released, it could help us detect new available versions for services, deploy them, and update the configuration in Git automatically.

- When there are multiple clusters per environment with the same services deployed, we end up with too many ArgoCD applications. One thing we could do is use the “app of apps” pattern and manage a single application to create all the other required applications for a given service.

- On the bigger projects, the volume of activity may require the queuing of deployments. For example, if two people merge changes in the main branch around the same time, their deployments could race. The last thing we want is for the last commit to be deployed and then replaced by the commit preceding it.
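The ordering problem in the last item can be sketched as a simple guard: before deploying, check that the candidate commit is newer on the main branch than the one already deployed. This is one possible approach rather than something we have built, and the function name and commit identifiers are hypothetical.

```python
from typing import List, Optional


def should_deploy(history: List[str], deployed: Optional[str], candidate: str) -> bool:
    """Decide whether candidate may replace the deployed commit.

    history lists the commits of the main branch, oldest first.
    """
    if candidate not in history:
        return False  # never deploy a commit that is not on the main branch
    if deployed is None or deployed not in history:
        return True  # nothing known to be deployed yet
    # Only move forward: the candidate must come after the deployed commit.
    return history.index(candidate) > history.index(deployed)


main_branch = ["a1", "b2", "c3"]
print(should_deploy(main_branch, deployed="b2", candidate="c3"))
print(should_deploy(main_branch, deployed="c3", candidate="b2"))
```

A guard like this does not replace a real queue, but it prevents a slow pipeline for an older commit from overwriting a newer deployment.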

We’re excited to explore these challenges. And, overall, our developers have welcomed these changes with open arms. It helps that our systems have been successful at stopping bad deployments from creating big incidents so far.

While we haven’t reached the end of our journey yet, we are confident that we are on the right path, moving at the right pace for our team.