
TL;DR:

Your AI sounds like a robot, and your customers can tell.

Sure, the answer is right, but something feels off. The tone of voice is stiff. The phrases are predictable and generic. At worst, it sounds copy-pasted. It may not seem like a big deal from your side of support, but in reality, it’s costing you more than you think.

Recent data shows that 45% of U.S. adults view customer service chatbots unfavorably, up from 43% in 2022. As awareness of chatbots has increased, so have negative opinions of them. Only 19% of people say chatbots are helpful or beneficial in addressing their queries. The gap isn't just about capability. It's about trust. When AI sounds impersonal, customers disengage or leave frustrated.

Luckily, you don't need to choose between automation and the human touch.

In this guide, we'll show you six practical ways to train your AI to sound natural, build trust, and deliver the kind of support your customers actually like.

The fastest way to make your AI sound more human is to teach it to sound like you. AI is only as good as the input you give it, so the more detailed your brand voice training, the more natural and on-brand your responses will be.

Start by building a brand voice guide. It doesn't need to be complicated, but it should clearly define how your brand communicates with customers. At minimum, include:

Think of your AI as a character. Samantha Gagliardi, Associate Director of Customer Experience at Rhoback, described their approach as building an AI persona:

"I kind of treat it like breaking down an actor. I used to sing and perform for a living — how would I break down the character of Rhoback? How does Rhoback speak? What age are they? What makes the most sense?"

✅ Create a brand voice guide with tone, style, formality, and example phrases.

Humans associate short pauses with thinking, so when your AI responds too quickly, it instantly feels unnatural.

Adding small delays helps your AI feel more like a real teammate.

Where to add response delays:

Even a one- to two-second pause can make the difference between an AI that sounds robotic and one that sounds human.

✅ Add instructions in your AI’s knowledge base to include short response delays during key moments.
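The tip above works through knowledge-base instructions. If your helpdesk instead lets you control reply timing in code, the same one- to two-second pause could be sketched as below. This is a swapped-in technique, not how any particular tool implements delays: `send_reply` is a hypothetical callback standing in for whatever actually delivers the message, and `SECONDS_PER_CHAR` is an assumed "typing speed" used to make longer replies pause slightly longer.

```python
import asyncio
from typing import Awaitable, Callable

MIN_DELAY_S = 1.0          # lower bound of the one- to two-second pause
MAX_DELAY_S = 2.0          # upper bound of the pause
SECONDS_PER_CHAR = 0.01    # hypothetical typing speed used to scale the pause


def typing_delay(reply: str) -> float:
    """Return a pause that grows with reply length, clamped to 1-2 seconds."""
    return min(MAX_DELAY_S, max(MIN_DELAY_S, len(reply) * SECONDS_PER_CHAR))


async def send_with_delay(
    reply: str, send_reply: Callable[[str], Awaitable[None]]
) -> None:
    """Wait briefly, then hand the reply to a (hypothetical) send function."""
    await asyncio.sleep(typing_delay(reply))
    await send_reply(reply)
```

Clamping keeps short replies from arriving instantly and long ones from stalling the conversation.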

Generic phrases make your AI sound like... well, AI. Customers can spot a copy-pasted response immediately — especially when it's overly formal.

That doesn't mean you need to be extremely casual. It means being true to your brand. Whether your voice is professional or conversational, the goal is the same: sound like a real person on your team.

Here's how to replace robotic phrasing with more brand-aligned responses:

| Generic Phrase | More Natural Alternative |
|---|---|
| “We apologize for the inconvenience.” | “Sorry about that, we’re working on it now.” (friendly) |
| “Your satisfaction is our top priority.” | “We want to make sure this works for you.” (friendly) |
| “Please be advised…” | “Just a quick heads up…” (friendly) |
| “Your request has been received.” | “Got it. Thanks for reaching out.” (friendly) |
| “I will now review your request.” | “Let me take a quick look.” (friendly) |

✅ Identify your five most common inquiries and give your AI a rewritten example response for each.

One of the biggest tells that a response is AI-generated? It ignores what's already happened.

When your AI doesn't reference order history or past conversations, customers are forced to repeat themselves. Repetition can lead to frustration and can quickly turn a good customer experience into a bad one.

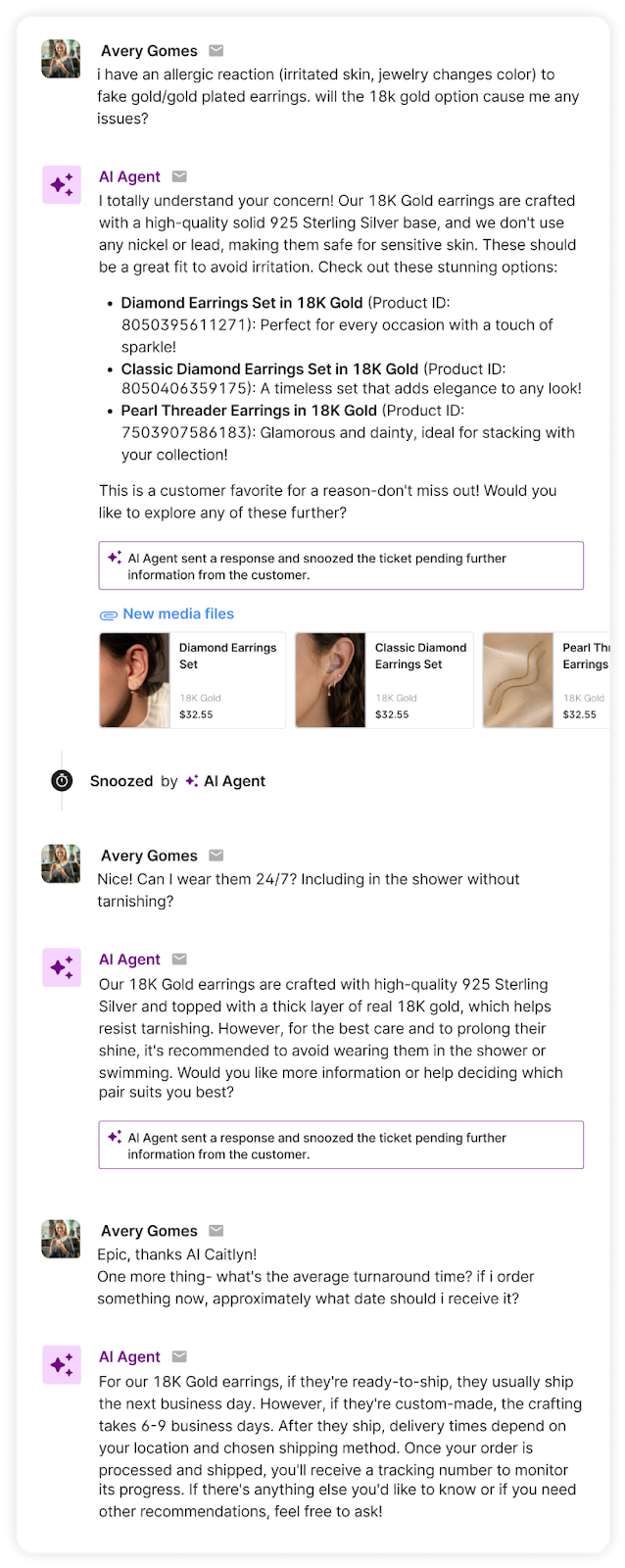

Great AI uses context to craft replies that feel personalized and genuinely helpful.

Here's what good context looks like in AI responses:

Tools like Gorgias AI Agent automatically pull in customer and order data, so replies feel human and contextual without sacrificing speed.

✅ Add instructions that prompt your AI to reference order details and/or past conversations in its replies, so customers feel acknowledged.
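As a rough illustration of that instruction, here is a minimal sketch that turns known order data into an extra line of context for the AI. The `Order` type, its fields, and the idea of prepending this text to the AI's instructions are all assumptions for illustration; they are not how Gorgias AI Agent or any specific tool works internally.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass
class Order:
    number: str
    status: str  # hypothetical status text, e.g. "in transit"


def context_instruction(first_name: str, last_order: Optional[Order]) -> str:
    """Build an extra instruction telling the AI what it already knows.

    Assumes your AI tool accepts additional free-text context per conversation,
    so it can acknowledge the customer's history instead of asking them to repeat it.
    """
    if last_order is None:
        return f"The customer's name is {first_name}. They have no previous orders on file."
    return (
        f"The customer's name is {first_name}. Their most recent order, "
        f"#{last_order.number}, is {last_order.status}. "
        "Reference this order instead of asking for an order number."
    )
```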

Customers just want help. They don't care whether it comes from a human or AI, as long as it's the right help. But if you try to trick them, it backfires fast. AI that pretends to be human often gives customers the runaround, especially when the issue is complex or emotional.

A better approach is to be transparent. Solve what you can, and hand off anything else to an agent as needed.

When to disclose that the customer is talking to AI:

For more on this topic, check out our article: Should You Tell Customers They're Talking to AI?

✅ Set clear rules for when your AI should escalate to a human and include handoff messaging that sets expectations and preserves context.

We're giving you permission to break the rules a little bit. The most human-sounding AI doesn't follow perfect grammar or structure. It reflects the messiness of real dialogue.

People don't speak in flawless sentences every time. We pause, rephrase, cut ourselves off, and throw in the occasional emoji or "uh." When AI has an unpredictable cadence, it feels more relatable and, in turn, more human.

What an imperfect AI could look like:

These imperfections give your AI a more believable voice.

✅ Add instructions for your AI that permit variation in grammar, tone, and sentence structure to mimic real human speech.

Human-sounding AI doesn’t require complex prompts or endless fine-tuning. With the right voice guidelines, small tone adjustments, and a few smart instructions, your AI can sound like a real part of your team.

Book a demo of Gorgias AI Agent and see for yourself.


TL;DR:

You’ve chosen your AI tool and turned it on, hoping you won’t have to answer another WISMO question. But now you’re here. Why is AI going in circles? Why isn’t it answering simple questions? Why does it hand off every conversation to a human agent?

Conversational AI and chatbots thrive on proper training and data. Like any other member of your customer support team, AI needs guidance. This includes knowledge documents, policies, brand voice guidelines, and escalation rules. So, if your AI has gone rogue, you may have skipped a step.

In this article, we’ll show you the top seven AI issues, why they happen, how to fix them, and the best practices for AI setup.


AI can only be as accurate as the information you feed it. If your AI is confidently giving customers incorrect answers, it likely has a gap in its knowledge or a lack of guardrails.

Insufficient knowledge can cause AI to pull context from similar topics to create an answer, while the lack of guardrails gives it the green light to compose an answer, correct or not.

How to fix it:

This is one of the most frustrating customer service issues out there. If it's left unfixed, you risk losing 29% of customers.

If your AI is putting customers through a never-ending loop, it’s time to review your knowledge docs and escalation rules.

How to fix it:

It can be frustrating when AI can’t do the bare minimum, like automate WISMO tickets. This issue is likely due to missing knowledge or overly broad escalation rules.

How to fix it:

One in two customers still prefer talking to a human over AI, according to Katana. Limiting them to AI-only support could cost you a sale or damage the relationship.

The top live chat apps clearly display options to speak with AI or a human agent. If your tool doesn’t have this, refine your AI-to-human escalation rules.

How to fix it:

If your agents are asking customers to repeat themselves, you’ve already lost momentum. One of the fastest ways to break trust is by making someone explain their issue twice. This happens when AI escalates without passing the conversation history, customer profile, or even a summary of what’s already been attempted.

How to fix it:

Sure, conversational AI has near-perfect grammar, but if its tone is entirely different from your agents’, customers can be put off.

This mismatch usually comes from not settling on an official customer support tone of voice. AI might be pulling from marketing copy. Agents might be winging it. Either way, inconsistency breaks the flow.

How to fix it:

When AI is underperforming, the problem isn’t always the tool. Many teams launch AI without ever mapping out what it's actually supposed to do. So it tries to do everything (and fails), or it does nothing at all.

It’s important to remember that support automation isn’t “set it and forget it.” It needs to know its playing field and boundaries.

How to fix it:

| AI should handle | AI should escalate to a human |
|---|---|
| Order tracking (“Where’s my package?”) | Upset, frustrated, or emotional customers |
| Return and refund policy questions | Billing problems or refund exceptions |
| Store hours, shipping rates, and FAQs | Technical product or troubleshooting issues |
| Simple product questions | Complex or edge-case product questions |
| Password resets | Multi-part or multi-issue requests |
| Pre-sale questions with clear, binary answers | Anything where a wrong answer risks churn |

Once you’ve addressed the obvious issues, it’s important to build a setup that works reliably. These best practices will help your AI deliver consistently helpful support.

Start by deciding what AI should and shouldn’t handle. Let it take care of repetitive tasks like order tracking, return policies, and product questions. Anything complex or emotionally sensitive should go straight to your team.

Use examples from actual tickets and messages your team handles every day. Help center articles are a good start, but real interactions are what help AI learn how customers actually ask questions.

Create rules that tell your AI when to escalate. These might include customer frustration, low confidence in the answer, or specific phrases like “talk to a person.” The goal is to avoid infinite loops and to hand things off before the experience breaks down.
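The escalation triggers described above (a request for a person, signs of frustration, low confidence, and looping) can be expressed as a small rule check. This is a minimal sketch, not any vendor's implementation: the trigger phrases, the 0.7 confidence threshold, the three-turn loop limit, and the assumption that your AI tool exposes a 0-1 confidence score are all hypothetical values to tune against your own tickets.

```python
from dataclasses import dataclass

# Hypothetical trigger lists; replace with the wording your customers actually use.
HANDOFF_PHRASES = ("talk to a person", "speak to a human", "real person")
FRUSTRATION_WORDS = ("ridiculous", "unacceptable", "angry", "frustrated")
MIN_CONFIDENCE = 0.7  # hypothetical threshold for the AI's confidence score
MAX_AI_TURNS = 3      # hand off before the conversation starts looping


@dataclass
class Turn:
    customer_message: str
    ai_confidence: float  # assumed 0-1 score exposed by your AI tool
    ai_turns_so_far: int


def should_escalate(turn: Turn) -> tuple[bool, str]:
    """Return (escalate?, reason), checking the most explicit signals first."""
    text = turn.customer_message.lower()
    if any(phrase in text for phrase in HANDOFF_PHRASES):
        return True, "customer asked for a human"
    if any(word in text for word in FRUSTRATION_WORDS):
        return True, "customer seems frustrated"
    if turn.ai_confidence < MIN_CONFIDENCE:
        return True, "low confidence in the answer"
    if turn.ai_turns_so_far >= MAX_AI_TURNS:
        return True, "conversation may be looping"
    return False, "AI can keep handling it"
```

For example, `should_escalate(Turn("Can I talk to a person please?", 0.95, 1))` returns `(True, "customer asked for a human")`. Returning a reason alongside the decision makes it easier to review escalations each week and see which rule fired.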

When a handoff happens, your agents should see everything the AI did. That includes the full conversation, relevant customer data, and any actions it has already attempted. This helps your team respond quickly and avoid repeating what the customer just went through.
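One way to picture "everything the AI did" is as a single handoff record that travels with the escalated conversation. The sketch below is illustrative only: the `Handoff` class, its fields, and the note format are hypothetical, and a real helpdesk will have its own data model for conversation history and customer profiles.

```python
from dataclasses import dataclass, field


@dataclass
class Handoff:
    reason: str                    # why the AI escalated
    transcript: list[str]          # full conversation so far
    customer: dict[str, str]       # relevant customer data (hypothetical keys)
    actions_attempted: list[str] = field(default_factory=list)

    def agent_note(self) -> str:
        """Summarize the handoff so the agent doesn't re-ask the customer."""
        lines = [f"Escalated because: {self.reason}"]
        lines += [f"{key}: {value}" for key, value in self.customer.items()]
        tried = ", ".join(self.actions_attempted) or "none"
        lines.append(f"AI already tried: {tried}")
        lines.append(f"Messages so far: {len(self.transcript)}")
        return "\n".join(lines)
```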

An easy way to keep order history, customer data, and conversation history in one place is by using a conversational commerce tool like Gorgias.

A jarring shift in tone between AI and agent makes the experience feel disconnected. Align aspects such as formality, punctuation, and language style so the transition from AI to human feels natural.

Look at recent escalations each week. Identify where the AI struggled or handed off too early or too late. Use those insights to improve training, adjust boundaries, and strengthen your automation flows.

If your AI chatbot isn’t working the way you expected, it’s probably not because the technology is broken. It’s because it hasn’t been given the right rules.

When you set AI up with clear responsibilities, it becomes a powerful extension of your team.

Want to see what it looks like when AI is set up the right way?

Try Gorgias AI Agent. It’s conversational AI built with smart automation, clean escalations, and ecommerce data in its core — so your customers get faster answers and your agents stay focused.


TL;DR:

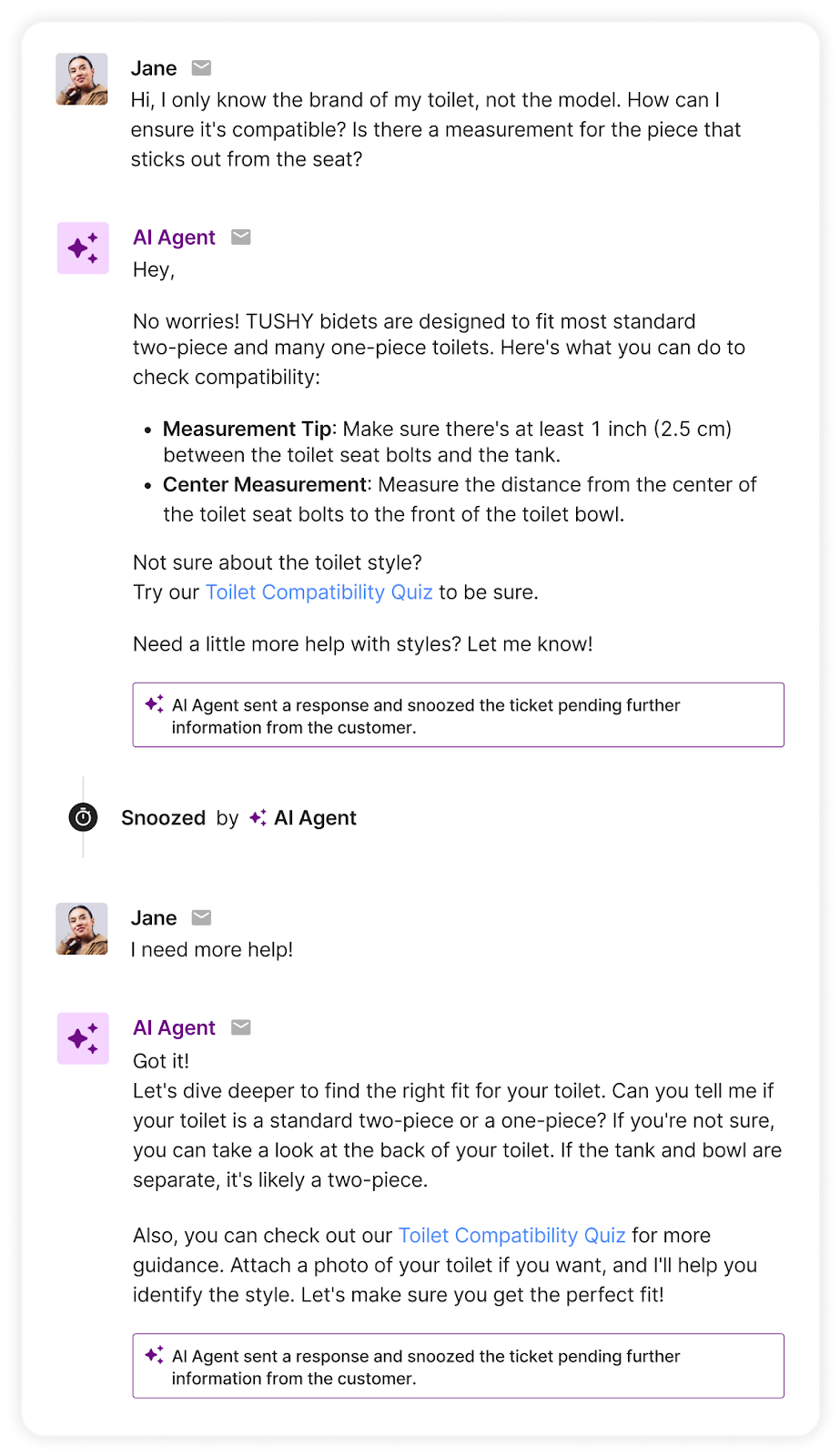

Customer education has become a critical factor in converting browsers into buyers. For wellness brands like Cornbread Hemp, where customers need to understand ingredients, dosages, and benefits before making a purchase, education has a direct impact on sales. The challenge is scaling personalized education when support teams are stretched thin, especially during peak sales periods.

Katherine Goodman, Senior Director of Customer Experience, and Stacy Williams, Senior Customer Experience Manager, explain how implementing Gorgias's AI Shopping Assistant transformed their customer education strategy into a conversion powerhouse.

In our second AI in CX episode, we dive into how Cornbread achieved a 30% conversion rate during BFCM, saving their CX team over four days of manual work.

Before diving into tactics, understanding why education matters in the wellness space helps contextualize this approach.

Katherine, Senior Director of Customer Experience at Cornbread Hemp, explains:

"Wellness is a very saturated market right now. Getting to the nitty-gritty and getting to the bottom of what our product actually does for people, making sure they're educated on the differences between products to feel comfortable with what they're putting in their body."

The most common pre-purchase questions Cornbread receives center around three areas: ingredients, dosages, and specific benefits. Customers want to know which product will help with their particular symptoms. They need reassurance that they're making the right choice.

What makes this challenging: These questions require nuanced, personalized responses that consider the customer's specific needs and concerns. Traditionally, this meant every customer had to speak with a human agent, creating a bottleneck that slowed conversions and overwhelmed support teams during peak periods.

Stacy, Senior Customer Experience Manager at Cornbread, identified the game-changing impact of Shopping Assistant:

"It's had a major impact, especially during non-operating hours. Shopping Assistant is able to answer questions when our CX agents aren't available, so it continues the customer order process."

A customer lands on your site at 11 PM, has questions about dosage or ingredients, and instead of abandoning their cart or waiting until morning for a response, they get immediate, accurate answers that move them toward purchase.

The real impact happens in how the tool anticipates customer needs. Cornbread uses suggested product questions that pop up as customers browse product pages. Stacy notes:

"Most of our Shopping Assistant engagement comes from those suggested product features. It almost anticipates what the customer is asking or needing to know."

Actionable takeaway: Don't wait for customers to ask questions. Surface the most common concerns proactively. When you anticipate hesitation and address it immediately, you remove friction from the buying journey.

One of the biggest myths about AI is that implementation is complicated. Stacy explains how Cornbread’s rollout was a straightforward three-step process: audit your knowledge base, flip the switch, then optimize.

"It was literally the flip of a switch and just making sure that our data and information in Gorgias was up to date and accurate."

Here's Cornbread’s three-phase approach:

Actionable takeaway: Block out time for that initial knowledge base audit. Then commit to regular check-ins because your business evolves, and your AI should evolve with it.

Read more: AI in CX Webinar Recap: Turning AI Implementation into Team Alignment

Here's something most brands miss: the way you write your knowledge base articles directly impacts conversion rates.

Before BFCM, Stacy reviewed all of Cornbread's Guidance and rephrased the language to make it easier for AI Agent to understand.

"The language in the Guidance had to be simple, concise, very straightforward so that Shopping Assistant could deliver that information without being confused or getting too complicated," Stacy explains. When your AI can quickly parse and deliver information, customers get faster, more accurate answers. And faster answers mean more conversions.

Katherine adds another crucial element: tone consistency.

"We treat AI as another team member. Making sure that the tone and the language that AI used were very similar to the tone and the language that our human agents use was crucial in creating and maintaining a customer relationship."

As a result, customers often don't realize they're talking to AI. Some even leave reviews saying they loved chatting with "Ally" (Cornbread's AI agent name), not realizing Ally isn't human.

Actionable takeaway: Review your knowledge base with fresh eyes. Can you simplify without losing meaning? Does it sound like your brand? Would a customer be satisfied with this interaction? If not, time for a rewrite.

Read more: How to Write Guidance with the “When, If, Then” Framework

The real test of any CX strategy is how it performs under pressure. For Cornbread, Black Friday Cyber Monday 2025 proved that their conversational commerce strategy wasn't just working; it was thriving.

Over the peak season, Cornbread saw:

Katherine breaks down what made the difference:

"Shopping Assistant popping up, answering those questions with the correct promo information helps customers get from point A to point B before the deal ends."

During high-stakes sales events, customers are in a hurry. They're comparing options, checking out competitors, and making quick decisions. If you can't answer their questions immediately, they're gone. Shopping Assistant kept customers engaged and moving toward purchase, even when human agents were swamped.

Actionable takeaway: Peak periods require a fail-safe CX strategy. The brands that win are the ones that prepare their AI tools in advance.

One of the most transformative impacts of conversational commerce goes beyond conversion rates. What your team can do with their newfound bandwidth matters just as much.

With AI handling straightforward inquiries, Cornbread's CX team has evolved into a strategic problem-solving team. They've expanded into social media support, provided real-time service during a retail pop-up, and have time for the high-value interactions that actually build customer relationships.

Katherine describes phone calls as their highest value touchpoint, where agents can build genuine relationships with customers. “We have an older demographic, especially with CBD. We received a lot of customer calls requesting orders and asking questions. And sometimes we end up just yapping,” Katherine shares. “I was yapping with a customer last week, and we'd been on the call for about 15 minutes. This really helps build those long-term relationships that keep customers coming back."

That's the kind of experience that builds loyalty, and it's only possible when your team isn't stuck answering repetitive tickets.

Stacy adds that agents now focus on "higher-level tickets or customer issues that they need to resolve. AI handles straightforward things, and our agents now really are more engaged in more complicated, higher-level resolutions."

Actionable takeaway: Stop thinking about AI only as a cost-cutting tool and start seeing it as an impact multiplier. The goal is to free your team to work on conversations that actually move the needle on customer lifetime value.

Cornbread isn't resting on their BFCM success. They're already optimizing for January, traditionally the biggest month for wellness brands as customers commit to New Year's resolutions.

Their focus areas include optimizing their product quiz to provide better data to both AI and human agents, educating customers on realistic expectations with CBD use, and using Shopping Assistant to spotlight new products launching in Q1.

The brands winning at conversational commerce aren't the ones with the biggest budgets or the largest teams. They're the ones who understand that customer education drives conversions, and they've built systems to deliver that education at scale.

Cornbread Hemp's success comes down to three core principles: investing time upfront to train AI properly, maintaining consistent optimization, and treating AI as a team member that deserves the same attention to tone and quality as human agents.

As Katherine puts it:

"The more time that you put into training and optimizing AI, the less time you're going to have to babysit it later. Then, it's actually going to give your customers that really amazing experience."

Watch the replay of the whole conversation with Katherine and Stacy to learn how Gorgias’s Shopping Assistant helps them turn browsers into buyers.


TL;DR:

Rising customer expectations, shoppers' willingness to pay a premium for convenience, and growing distrust of social media channels for making purchase decisions are making it harder to turn a profit.

In this emerging era, AI is becoming not only more prominent but a necessity for brands that want to stay ahead. Tools like Gorgias Shopping Assistant can help drive measurable revenue while reducing support costs.

For example, a brand that specializes in premium outdoor apparel implemented Shopping Assistant and saw a 2.25% uplift in GMV and a 29% uplift in average order value (AOV).

But how, among competing priorities and expenses, do you convince leadership to implement it? We’ll show you.

Shoppers want real-time, on-demand help that's personalized across devices.

Shopping Assistant recalls a shopper’s browsing history, like what they have clicked, viewed, and added to their cart. This allows it to make more relevant suggestions that feel personal to each customer.

The AI ecommerce tools market was valued at $7.25 billion in 2024 and is expected to reach $21.55 billion by 2030.

Your competitors are using conversational AI to support, sell, and retain. Shopping Assistant satisfies that need, providing upsells and recommendations rooted in real shopper behavior.

Conversational AI has real revenue implications, impacting customer retention, average order value (AOV), conversion rates, and gross merchandise value (GMV).

For example, a leading nutrition brand saw a GMV uplift of over 1%, an increase in AOV of over 16%, and a chat conversion rate of over 15% after implementing Shopping Assistant.

Overall, Shopping Assistant drives higher engagement and more revenue per visitor, sometimes surpassing 50% and 20%, respectively.

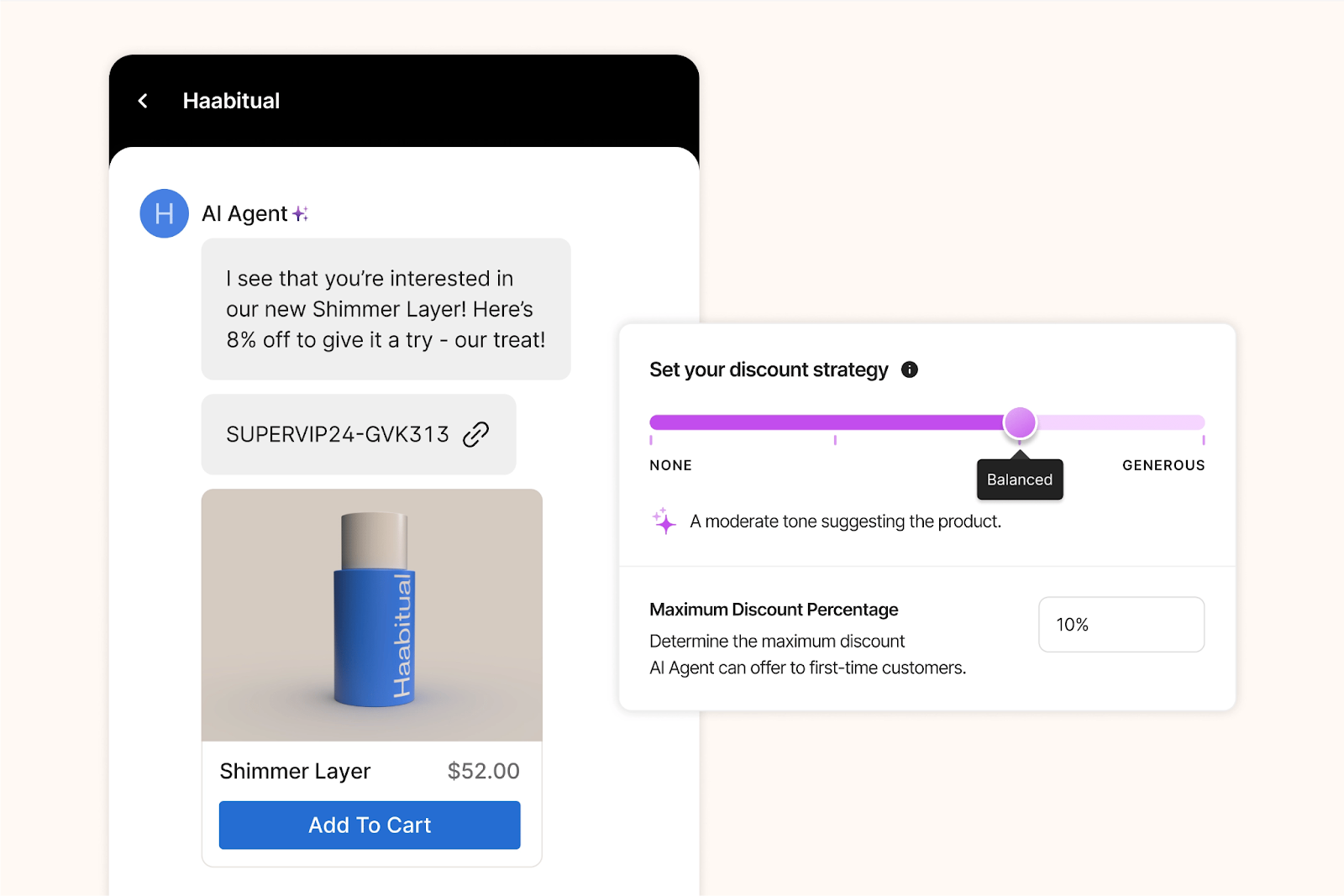

Shopping Assistant engages, personalizes, recommends, and converts. It offers proactive recommendations, smart upsells, and dynamic discounts, all highly personalized and aimed at guiding shoppers to checkout.

After implementing Shopping Assistant, leading ecommerce brands saw real results:

| Industry | Primary Use Case | GMV Uplift (%) | AOV Uplift (%) | Chat CVR (%) |
|---|---|---|---|---|
| Home & interior decor 🖼️ | Help shoppers coordinate furniture with existing pieces and color schemes. | +1.17 | +97.15 | 10.30 |
| Outdoor apparel 🎿 | In-depth explanations of technical features and confidence when purchasing premium, performance-driven products. | +2.25 | +29.41 | 6.88 |
| Nutrition 🍎 | Personalized guidance on supplement selection based on age, goals, and optimal timing. | +1.09 | +16.40 | 15.15 |
| Health & wellness 💊 | Comparing similar products and understanding functional differences to choose the best option. | +1.08 | +11.27 | 8.55 |
| Home furnishings 🛋️ | Help choose furniture sizes and styles appropriate for children and safety needs. | +12.26 | +10.19 | 1.12 |
| Stuffed toys 🧸 | Clear care instructions and support finding replacements after accidental product damage. | +4.43 | +9.87 | 3.62 |
| Face & body care 💆‍♀️ | Assistance finding the correct shade online, especially when previously purchased products are no longer available. | +6.55 | +1.02 | 5.29 |

Shopping Assistant drives uplift in chat conversion rate and makes successful upsell recommendations.

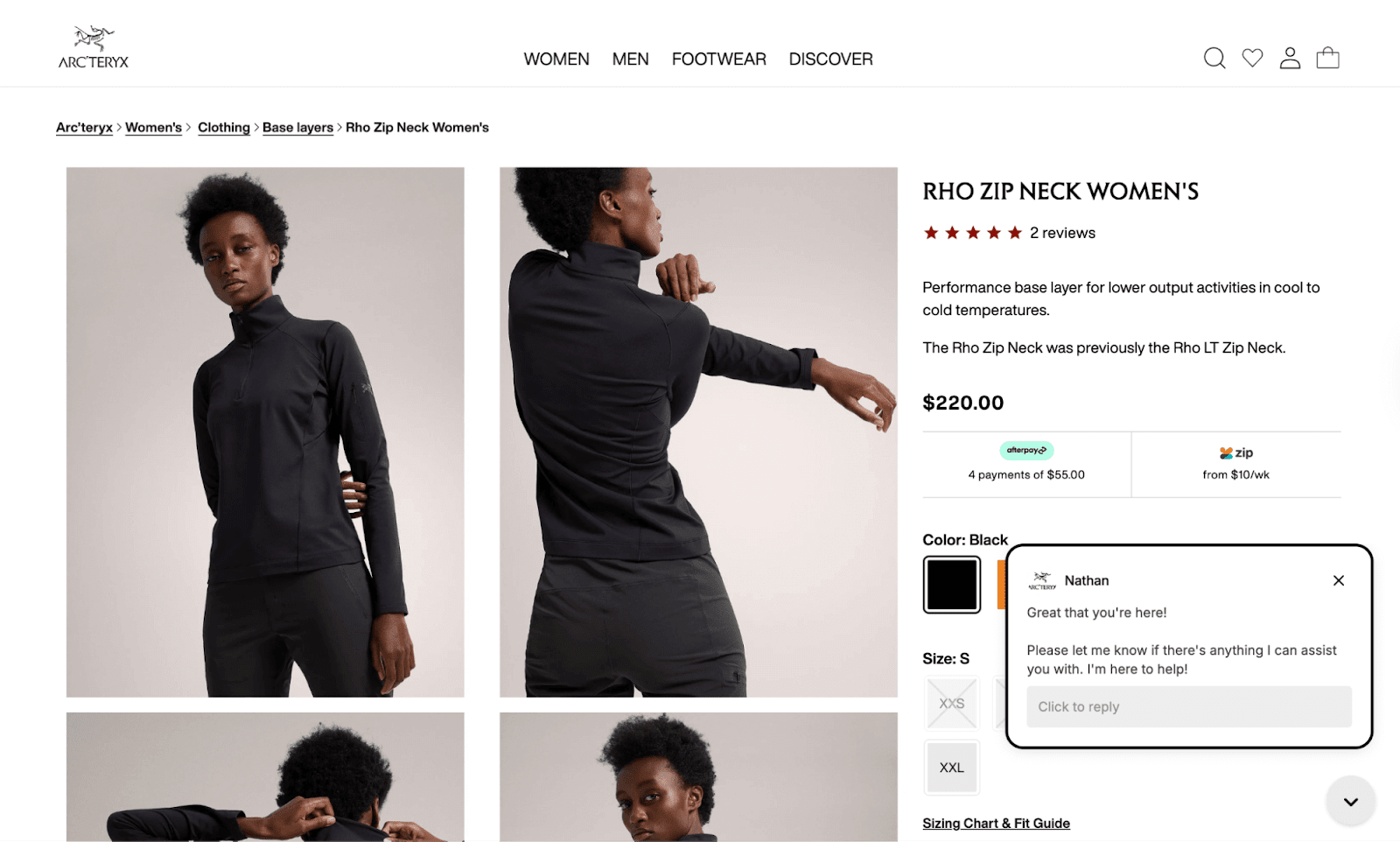

“It’s been awesome to see Shopping Assistant guide customers through our technical product range without any human input. It’s a much smoother journey for the shopper,” says Nathan Larner, Customer Experience Advisor for Arc’teryx.

For Arc’teryx, that smoother customer journey translated into sales. The brand saw a 75% increase in conversion rate (from 4% to 7%) and 3.7% of overall revenue influenced by Shopping Assistant.
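The 75% figure is a relative increase, not a gain of 75 percentage points. Worked through with the numbers quoted above:

$$
\text{relative lift} = \frac{7\% - 4\%}{4\%} = \frac{3}{4} = 75\%
\qquad
\text{absolute lift} = 7\% - 4\% = 3 \text{ percentage points}
$$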

Because it follows shoppers’ live journey during each session on your website, Shopping Assistant catches shoppers in the moment. It answers questions or concerns that might normally halt a purchase, gets strategic with discounting (based on rules you set), and upsells.

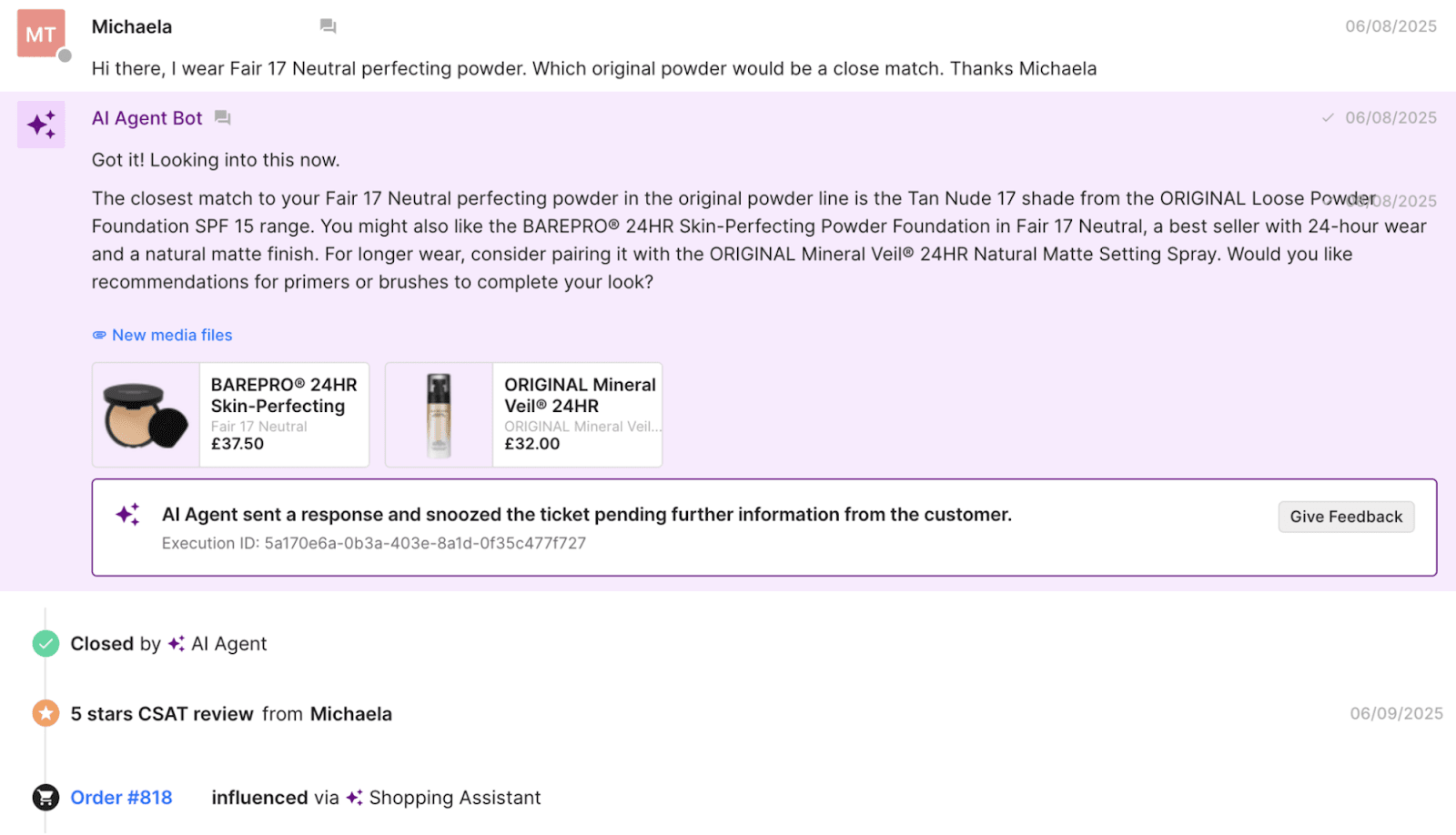

The overall ROI can be significant. For example, bareMinerals saw an 8.83x return on investment.

"The real-time Shopify integration was essential as we needed to ensure that product recommendations were relevant and displayed accurate inventory,” says Katia Komar, Sr. Manager of Ecommerce and Customer Service Operations, UK at bareMinerals.

“Avoiding customer frustration from out-of-stock recommendations was non-negotiable, especially in beauty, where shade availability is crucial to customer trust and satisfaction. This approach has led to increased CSAT on AI converted tickets."

Shopping Assistant can impact CSAT scores, response times, resolution rates, AOV, and GMV.

For Caitlyn Minimalist, those metrics were an 11.3% uplift in AOV, an 18% click-through rate for product recommendations, and a 50% sales lift versus human-only chats.

"Shopping Assistant has become an intuitive extension of our team, offering product guidance that feels personal and intentional,” says Anthony Ponce, its Head of Customer Experience.

Support agents already have limited time to assist customers, so taking advantage of sales opportunities can be difficult. Shopping Assistant takes over that role, removing obstacles to purchase and clarifying the right choice within a large product catalog.

With a product that’s not yet mainstream in the US, TUSHY leverages Shopping Assistant for product education and clarification.

"Shopping Assistant has been a game-changer for our team, especially with the launch of our latest bidet models,” says Ren Fuller-Wasserman, Sr. Director of Customer Experience at TUSHY.

“Expanding our product catalog has given customers more choices than ever, which can overwhelm first-time buyers. Now, they’re increasingly looking to us for guidance on finding the right fit for their home and personal hygiene needs.”

The bidet brand saw 13x return on investment after implementation, a 15% increase in chat conversion rate, and a 2x higher conversion rate for AI conversations versus human ones.

Customer support metrics include:

Revenue metrics to track include:

Shopping Assistant connects to your ecommerce platform (like Shopify) and streamlines information between your helpdesk and order data. It’s also trained on your catalog and support history.

Allow your agents to focus on support and sell more by tackling questions that are getting in the way of sales.


TL;DR:

While most ecommerce brands debate whether to implement AI support, customers already rate AI assistance nearly as highly as human support. The future isn't coming. It's being built in real-time by brands paying attention.

As a conversational commerce platform processing millions of support tickets across thousands of brands, we see what's working before it becomes common knowledge. Three major shifts are converging faster than most founders realize, and this article breaks down what's already happening rather than what might happen someday.

By the end of 2026, we predict that the performance gap between ecommerce brands won't be determined by who adopted AI first. It will be determined by who built the content foundation that makes AI actually work.

Right now, we're watching this split happen in real time. AI can only be as good as the knowledge base it draws from. When we analyze why AI escalates tickets to human agents, the pattern is unmistakable.

The five topics triggering the most AI escalations are:

These aren’t complicated questions — they're routine questions every ecommerce brand faces daily. Yet some brands automate these at 60%+ rates while others plateau at 20%. The difference isn't better AI. It's better documentation.

Take SuitShop, a formalwear brand that reached 30% automation with a lean CX team. Their Director of Customer Experience, Katy Eriks, treats AI like a team member who needs coaching, not a plug-and-play tool.

When Katy first turned on AI in August 2023, the results were underwhelming. So she paused during their slow season and rebuilt their Help Center from the ground up. "I went back to the tickets I had to answer myself, checked what people were searching in the Help Center, and filled in the gaps," she explained.

The brands achieving high automation rates share Katy's approach:

AI echoes whatever foundation you provide. Clear documentation becomes instant, accurate support. Vague policies become confused AI that defaults to human escalation.

Read more: Coach AI Agent in one hour a week: SuitShop’s guide

Two distinct groups will emerge next year. Brands that invest in documentation quality now will deliver consistently better experiences at lower costs. Those who try to deploy AI on top of messy operations will hit automation plateaus and rising support costs. Every brand will eventually have access to similar AI technology. The competitive advantage will belong to those who did the unexciting work first.

Something shifted in July 2025. Gorgias’s AI accuracy jumped significantly after the GPT-5 release. For the first time, CX teams stopped second-guessing every AI response. We watched brand confidence in AI-generated responses rise from 57% to 85% in just a few months.

What this means in practice is that AI now outperforms human agents:

For the first time, AI isn't just faster than humans. It's more consistent, more accurate, and even more empathetic at scale.

This isn't about replacing humans. It's about what becomes possible when you free your team from repetitive work. Customer expectations are being reset by whoever responds fastest and most completely, and the brands crossing this threshold first are creating a competitive moat.

At Gorgias, the most telling signal this year was that AI CSAT on chat improved 40% faster than on email. In other words, customers are beginning to prefer AI for certain interactions because it's immediate and complete.

Within the next year, we expect the satisfaction gap to hit zero for transactional support. The question isn't whether AI can match humans. It's what you'll do with your human agents once it does.

The brands that have always known support should drive revenue will finally have the infrastructure to make it happen on a bigger scale. AI removes the constraint that's held this strategy back: human bandwidth.

Most ecommerce leaders already understand that support conversations are sales opportunities. Product questions, sizing concerns, and “just browsing” chats are all chances to recommend, upsell, and convert. The problem wasn't awareness but execution at volume.

We analyzed revenue impact across brands using AI-powered product recommendations in support conversations. The results speak for themselves:

It's clear that conversations that weave in product recommendations convert at higher rates and result in larger order values. It’s time to treat support conversations as active buying conversations.

If you're already training support teams on product knowledge and tracking revenue per conversation, keep doing exactly what you're doing. You've been ahead of the curve. Now AI gives you the infrastructure to scale those same practices without the cost increase.

If you've been treating support purely as a cost center, start measuring revenue influence now. Track which conversations lead to purchases, which agents naturally upsell, and where customers ask for product guidance.

Response time is no longer a brand's key differentiator. The new differentiator is conversational commerce: systems that share details and context across every touchpoint.

Today, a typical customer journey looks something like this: see product on Instagram, ask a question via DM, complete purchase on mobile, track order via email. At each step, customers expect you to remember everything from the last interaction.

The most successful ecommerce tech stacks treat the helpdesk as the foundation that connects everything else. When your support platform connects to your ecommerce platform, shipping providers, returns portal, and every customer communication channel, context flows automatically.

A modern integration approach looks like this. Your ecommerce platform (like Shopify) feeds order data into a helpdesk like Gorgias, which becomes the hub for all customer conversations across email, chat, SMS, and social DMs. From there, connections branch out to payment providers, shipping carriers, and marketing automation tools.

As Dr. Bronner’s Senior CX Manager noted, “While Salesforce needed heavy development, Gorgias connected to our entire stack with just a few clicks. Our team can now manage workflows without needing custom development — we save $100k/year by switching."

As new channels emerge, brands with flexible tech stacks will adapt quickly while those with static systems will need months of development work to support new touchpoints. The winners will be brands that invest in their tools before adding new channels, not after customer complaints force their hand.

Start auditing your current integrations now. Where does customer data get stuck? Which systems don’t connect to each other? These gaps are costing you more than you realize, and in the future, they'll determine whether you scale or stagnate.

Post-purchase support quality will be a stronger predictor of customer lifetime value than any email campaign. Brands that treat support as a retention investment rather than a cost center will outperform in repeat purchase rates.

Returns and exchanges are make-or-break moments for customer lifetime value. How you handle problems, delays, and disappointments determines whether customers come back or shop elsewhere next time. According to Narvar, 96% of customers say they won’t repurchase from a brand after a poor return experience.

What customers expect reflects this reality. They want proactive shipping updates without having to ask, one-click returns with instant label generation, and notifications about problems before they have to reach out. When something goes wrong, they expect you to tell them first, not make them track you down for answers.

The quality of your response when things go wrong matters more than getting everything right the first time. Exchange suggestions during the return flow can keep the sale alive, turning a potential loss into loyalty.

Brands that treat post-purchase as a retention strategy rather than a task to cross off will see much higher repeat purchase rates. Those still relying purely on email marketing for retention will wonder why their customer lifetime value plateaus.

Start measuring post-return CSAT scores and repeat purchase rates by support interaction quality. These metrics will tell you whether your post-purchase experience is building loyalty or quietly eroding it.

After absorbing these predictions about AI accuracy, content infrastructure, revenue-centric support, context, and post-purchase tactics, here's your roadmap for the next 24 months.

Now (in 90 days):

Next (in 6-12 months):

Watch (in 12-24 months):

The patterns we've shared, from AI crossing the accuracy threshold to documentation quality, are happening right now across thousands of brands. Over the next 24 months, teams will be separated by operational maturity.

Book a demo to see how leading brands are already there.


As we all locked down in March 2020 and changed our shopping habits, many brick-and-mortar retailers started their first online storefronts.

Gorgias has benefited from the resulting ecommerce growth over the past two years, and we have grown the team to keep pace: from 30 employees at the start of 2020 to more than 200 today, on our journey to delivering better customer service.

Our engineering team accounted for much of this hiring, which created some challenges and growing pains. What worked at the beginning with our team of three did not hold up when the team grew to 20 people. And the systems that scaled the team to 20 needed updates to support a team of 50. To keep growing, we needed to build something more sustainable.

Continuous deployment, and the changes required to support it, presented a major opportunity to reach the scale we aspired to. In this article, I’ll explore how we automated and streamlined our process to make our developers’ lives easier and enable faster iteration.

Throughout the last two years of accelerated growth, we’ve identified a few things that we could do to better support our team expansion.

Before optimizing the feature release process, here’s how things went for our earlier, smaller team when deploying new additions:

This wasn’t perfect, but it was an effective solution for a small team. However, the accelerated growth in the engineering team led to a sharp increase in the number of projects and also collaborators on each project. We began to notice several points of friction:

It was clear that things needed to change.

On the Site Reliability Engineering (SRE) team, we are fans of the GitOps approach, where Git is the single source of truth. So when the previously mentioned points of friction became more critical, we felt that all the tooling involved in GitOps practices could help us find practical solutions.

Additionally, these solutions would often rely on tooling we already had in place, such as Kubernetes and Helm.

GitOps is an operational framework. It takes application-development best practices and applies them to infrastructure automation.

The main takeaway is that in a GitOps setting, everything from code to infrastructure configuration is versioned in Git. It is then possible to create automation by leveraging the workflows associated with Git.

One such class of automation is “operations by pull request,” where pull requests and their associated events trigger various operations.

Here are some examples:

ArgoCD is a continuous deployment tool that relies on GitOps practices. It helps synchronize live environments and services to version-controlled declarative service definitions and configurations, which ArgoCD calls Applications.

In simpler terms, an Application resource tells ArgoCD to look at a Git repository and to make sure the deployed service’s configuration matches the one stored in Git.

The goal wasn’t to reinvent the wheel when implementing continuous deployment. We instead wanted to approach it in a progressive manner. This would help build developer buy-in, lay the groundwork for a smoother transition, and reduce the risk of breaking deploys. ArgoCD was an excellent step toward those goals, given how flexible it is with customizable Config Management Plugins (CMP).

ArgoCD can track a branch to keep everything up to date with the last commit, but can also make sure a particular revision is used. We decided to use the latter approach as an intermediate step, because we weren’t quite ready to deploy off the HEAD of our repositories.
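The following minimal Python sketch makes the branch-versus-revision distinction concrete by building an ArgoCD Application manifest as a plain dict. The field names (`repoURL`, `path`, `targetRevision`, `destination`) follow ArgoCD's Application resource. The service name, repository URL, chart path, and commit SHA are hypothetical placeholders, as is the assumption that ArgoCD runs in the `argocd` namespace. None of this reflects our actual setup.

```python
import json


def build_application(name, repo_url, path, revision, dest_server, dest_namespace):
    """Return an ArgoCD Application manifest as a plain dict.

    spec.source.targetRevision accepts a branch name (follow that branch's
    latest commit) or a commit SHA (pin one exact revision).
    """
    return {
        "apiVersion": "argoproj.io/v1alpha1",
        "kind": "Application",
        # Assumes ArgoCD is installed in the conventional "argocd" namespace.
        "metadata": {"name": name, "namespace": "argocd"},
        "spec": {
            "project": "default",
            "source": {"repoURL": repo_url, "path": path, "targetRevision": revision},
            "destination": {"server": dest_server, "namespace": dest_namespace},
        },
    }


if __name__ == "__main__":
    # Hypothetical service, repository, and commit SHA.
    manifest = build_application(
        name="example-service",
        repo_url="https://git.example.com/acme/example-service.git",
        path="chart",
        revision="0123456789abcdef0123456789abcdef01234567",
        dest_server="https://kubernetes.default.svc",
        dest_namespace="example",
    )
    # kubectl accepts JSON manifests as well as YAML.
    print(json.dumps(manifest, indent=2))
```

Pinning `targetRevision` to a commit SHA corresponds to the intermediate step described above. Setting it to a branch name such as `main` would instead have ArgoCD follow that branch's latest commit.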

The only difference from a pipeline perspective is that it now updates the tracked revision in ArgoCD instead of running our complex deployment scripts. ArgoCD has a Command Line Interface (CLI) that allows us to simply do that. Our deployment jobs only need to run the following command:

The developers’ workflow is left untouched at this point. Now comes the fun part.

Our biggest requirement for continuous deployment was to have some sort of safeguard in case things went wrong. No matter how much we trust our tests, it is always possible that a bug makes its way to our production environments.

Before implementing Argo Rollouts, we still kept an eye on the system to make sure everything was fine during deployment and took quick action when issues were discovered. But up to that point, this process was carried out manually.

It was time to automate that process and raise our team’s confidence when deploying new changes. With a safety net of sorts in place, we could be sure that things would go according to plan without checking everything manually.

Argo Rollouts is a progressive delivery controller. It relies on a Kubernetes controller and a set of custom resource definitions (CRDs) to provide advanced deployment capabilities on top of those natively offered by Kubernetes. These include features like:

We were especially interested in the canary and canary analysis features. By shifting only a small portion of traffic to the new version of an application, we can limit the blast radius in case anything is wrong. Performing an analysis allows us to automatically, and periodically, check that our service’s new version is behaving as expected before promoting this canary.

Argo Rollouts is compatible with multiple metric providers, including Datadog, which is the tool we use. This allows us to run one or more Datadog queries every few minutes and compare the results with a threshold value we specify.

We can then configure Argo Rollouts to automatically take action, should the threshold(s) be exceeded too often during the analysis. In those cases, Argo Rollouts scales down the canary and scales the previous stable version of our software back to its initial number of replicas.

Each service has its own metrics to monitor, but for starters we added an error rate check for all of our services.
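The decision logic behind such an error-rate check fits in a few lines. The sketch below is a simplified, hypothetical model that loosely mirrors how Argo Rollouts counts failed measurements against a failure limit. It is not the controller's code. The error counts, threshold, and limit are made-up values, not our production settings.

```python
from dataclasses import dataclass


@dataclass
class Measurement:
    """Result of one periodic metrics query for the canary."""
    errors: int
    requests: int

    @property
    def error_rate(self) -> float:
        # An interval with no traffic is treated as error-free.
        return self.errors / self.requests if self.requests else 0.0


def run_analysis(measurements, max_error_rate, failure_limit):
    """Simplified canary analysis: 'Failed' once more than failure_limit
    measurements exceed max_error_rate, otherwise 'Successful'."""
    failures = 0
    for measurement in measurements:
        if measurement.error_rate > max_error_rate:
            failures += 1
            if failures > failure_limit:
                # The real controller would abort here: scale the canary
                # down and restore the stable version's replica count.
                return "Failed"
    return "Successful"


if __name__ == "__main__":
    # Hypothetical error counts from three consecutive queries.
    samples = [Measurement(3, 1200), Measurement(45, 1100), Measurement(2, 1300)]
    # One bad interval is tolerated when failure_limit=1.
    print(run_analysis(samples, max_error_rate=0.01, failure_limit=1))
```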

Remember when I mentioned replacing complex, project-specific deployment scripts with a single, simple command? That’s not entirely accurate, and requires some additional nuance for a full understanding.

Not only did we need to deploy software on different kinds of environments (staging and production), but also in multiple Kubernetes clusters per environment. For example, the applications composing the Gorgias core platform are deployed across multiple cloud regions all around the world.

ArgoCD and Argo Rollouts might seem like magic tools, but we still needed some “glue” to make things stick together. Thanks to ArgoCD’s application-based mechanism, we were able to get rid of project-specific scripts and use a single common tool across all projects. We named this in-house tool deployment conductor.

We even went a step further and implemented this tool in a way that accepts simple YAML configuration files. Such files allow us to declare various environments and clusters in which we want each individual project to be deployed.

When deploying a service to an environment, our tool will then go through all clusters listed for that environment.

For each of these, it will look for dedicated values.yaml files in the service’s chart’s directory. This allows developers to change a service’s configuration based on the environment and cluster in which it’s deployed. Typically, they would want to edit the number of replicas for each service depending on the geographical region.
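Here is a minimal Python sketch of the cluster-and-values lookup described above. The config structure, the cluster names, and the `values-<environment>-<cluster>.yaml` naming scheme are all assumptions for illustration. This article doesn't show deployment conductor's actual file format.

```python
from __future__ import annotations

from pathlib import Path

# Hypothetical parsed form of a per-project YAML config.
EXAMPLE_CONFIG = {
    "environments": {
        "staging": {"clusters": ["staging-us"]},
        "production": {"clusters": ["prod-us", "prod-eu"]},
    }
}


def values_files(chart_dir: Path, environment: str, cluster: str) -> list[Path]:
    """Existing values files for one cluster, most generic first.

    Hypothetical naming scheme. When passed to Helm as repeated -f flags,
    later files override earlier ones, so the cluster-specific file wins.
    """
    candidates = [
        chart_dir / "values.yaml",
        chart_dir / f"values-{environment}.yaml",
        chart_dir / f"values-{environment}-{cluster}.yaml",
    ]
    return [path for path in candidates if path.is_file()]


def plan_deployment(config: dict, chart_dir: Path, environment: str) -> dict[str, list[Path]]:
    """Map each cluster listed for the environment to its values files."""
    clusters = config["environments"][environment]["clusters"]
    return {cluster: values_files(chart_dir, environment, cluster) for cluster in clusters}
```

With this layout, a developer who only needs more replicas in one region adds a single cluster-specific file, and no deployment script changes.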

This makes it much easier for developers than having to manage configuration and maintain deployment scripts.

This leads us to the end of our journey’s first leg: our first encounter with continuous deployment.

After we migrated all our Kubernetes Deployments to Argo Rollouts, we let our developers get acclimated for the next few weeks.

Our new setup still wasn’t fully optimized, but we felt like it was a big improvement compared to the previous one. And while we could think of many improvements to make things even more reliable before enabling continuous deployment, we decided to get feedback from the team during this period, to iterate more effectively.

Some projects introduced additional technicalities to overcome, but we easily identified a small first batch of projects where we could enable CD. Before deployment, we asked the development team if we were missing anything they needed to be comfortable with automatic deployment of their code in production environments.

With everyone feeling good about where we were at, we removed the manual step in our CI system (GitLab) for jobs deploying to production environments.

We’re still monitoring this closely, but so far we haven’t had any issues. We still plan on enabling continuous deployment on all our projects in the near future, but it will be a work in progress for now.

Here are some ideas for future improvements that anticipate potential roadblocks:

We’re excited to explore these challenges. And, overall, our developers have welcomed these changes with open arms. It helps that our systems have been successful at stopping bad deployments from creating big incidents so far.

While we haven’t reached the end of our journey yet, we are confident that we are on the right path, moving at the right pace for our team.

As you work with SQLAlchemy, over time, you might have a performance nightmare brewing in the background that you aren’t even aware of.

In this lesser-known issue, which strikes primarily in larger projects, normal usage leads to an ever-growing number of idle-in-transaction database connections. These open connections can kill the overall performance of the application.

While you can fix this issue down the line, when it begins to take a toll on your performance, it takes much less work to mitigate the problem from the start.

At Gorgias, we learned this lesson the hard way. After testing different approaches, we solved the problem by extending the high-level SQLAlchemy classes (namely sessions and transactions) with functionality that allows working with "live" DB (database) objects for limited periods of time, expunging them after they are no longer needed.

This analysis covers everything you need to know to close those unnecessary open DB connections and keep your application humming along.

Leading Python web frameworks such as Django come with an integrated ORM (object-relational mapping) that handles all database access, separating most of the low-level database concerns from the actual user code. The developer can write their code focusing on the actual logic around models, rather than thinking of the DB engine, transaction management or isolation level.

While this scenario seems enticing, big frameworks like Django may not always be suitable for our projects. What happens if we want to build our own starting from a microframework (instead of a full-stack framework) and augment it only with the components that we need?

In Python, the extra packages we would use to build ourselves a full-fledged framework are fairly standard: They will most likely include Jinja2 for template rendering, Marshmallow for dealing with schemas and SQLAlchemy as ORM.

Not all projects are web applications (following a request-response pattern) and among web applications, most of them deal with background tasks that have nothing to do with requests or responses.

This is important to understand because in request-response paradigms, we usually open a DB transaction upon receiving a request and we close it when responding to it. This allows us to associate the number of concurrent DB transactions with the number of parallel HTTP requests handled. A transaction stays open for as long as a request is being processed, and that must happen relatively quickly — users don't appreciate long loading times.

Transactions opened and closed by background tasks are a totally different story: There's no clear and simple rule on how DB transactions are managed at a code level, there's no easy way to tell how long tasks (should) last, and there usually isn't any upper limit to the execution time.

This could lead to potentially long transaction times, during which the process effectively holds a DB connection open without actually using it for the majority of the time period. This state is known as an idle-in-transaction connection state and should be avoided as much as possible, because it blocks DB resources without actively using them.

To fully understand how database access transpires in a SQLAlchemy-based app, one needs to understand the layers responsible for the execution.

At the highest level, we code our DB interaction using high-level SQLAlchemy queries on our defined models. SQLAlchemy's ORM then transforms each query into one or more SQL statements, which are passed on to a database engine (driver) through a common Python DB API defined by PEP-249. (PEP-249 is a Python Enhancement Proposal dedicated to standardizing Python DB server access.) The database engine communicates with the actual database server.

At first glance, everything looks good in this stack. However, there's one tiny problem: The DB API (defined by PEP-249) does not provide an explicit way of managing transactions. In fact, it mandates the use of a default transaction regardless of the operations you're executing, so even the simplest SELECT will open a transaction if none is open on the current connection.

SQLAlchemy builds on top of PEP-249, doing its best to stay out of driver implementation details. That way, any Python DB driver claiming PEP-249 compatibility could work well with it.

While this is generally a good idea, SQLAlchemy has no choice but to inherit the limitations and design choices made at the PEP-249 level. More precisely (and importantly), it will automatically open a transaction for you upon the very first query, regardless of whether it’s needed. And that's the root of the issue we set out to solve: In production, you'll probably end up with a lot of unwanted transactions, holding on to DB resources for longer than desired.
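You can observe this from the session's side with the sketch below. It assumes SQLAlchemy 2.0 and an in-memory SQLite database so it runs anywhere. It only shows SQLAlchemy's session-level transaction. With psycopg2 on Postgres, the driver also sends a BEGIN to the server, which is what produces the idle-in-transaction connections discussed here.

```python
from sqlalchemy import create_engine, text
from sqlalchemy.orm import Session

# In-memory SQLite keeps the sketch self-contained.
engine = create_engine("sqlite://")

with Session(engine) as session:
    print(session.in_transaction())    # False: nothing has run yet
    session.execute(text("SELECT 1"))  # a read-only query...
    print(session.in_transaction())    # True: ...still began a transaction
    session.commit()
    print(session.in_transaction())    # False until the next query
```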

Also, SQLAlchemy uses sessions (in-memory caches of models) that rely on transactions, and the whole SQLAlchemy world is built around sessions. While you could technically ditch them and avoid the idle-in-transaction problem with a “lower-level” interface to the DB, all of the examples and documentation you’ll find online use the “higher-level” interface (i.e., sessions). You will likely feel like you are swimming against the tide to get that workaround up and running.

Some DB servers, most notably Postgres, default to an autocommit mode. In this mode, each SQL statement is atomic on its own, which is what developers are likely to expect. By default, statements run outside a transaction block, and one is opened explicitly only when needed.

If you're reading this, you have probably already Googled "sqlalchemy autocommit" and may have found SQLAlchemy's official documentation on the (now deprecated) autocommit mode. Unfortunately, this functionality is a "soft" autocommit implemented purely in SQLAlchemy, on top of the PEP-249 driver. It has nothing to do with the DB's native autocommit mode.

This version works by simply committing the opened transaction as soon as SQLAlchemy detects an SQL statement that modifies data. Unfortunately, that doesn't fix our problem; the pointless, underlying DB transaction opened by non-modifying queries still remains open.

When using Postgres, we could in theory play with the new AUTOCOMMIT isolation level option introduced in psycopg2 to make use of the DB-level autocommit mode. However this is far from ideal as it would require hooking into SQLAlchemy's transaction management and adjusting the isolation level each time as needed. Additionally, "autocommit" isn't really an isolation level and it’s not desirable to change the connection's isolation level all the time, from various parts of the code. You can find more details on this matter, along with a possible implementation of this idea in Carl Meyer's article “PostgreSQL Transactions and SQLAlchemy.”

At Gorgias, we always prefer explicit solutions to implicit assumptions. By including all details, even common ones that most developers would assume by default, we can be more clear and leave less guesswork later on. This is why we didn't want to hack together a solution behind the scenes, just to get rid of our idle-in-transactions problem. We decided to dig deeper and come up with a proper, explicit, and (almost) hack-free method to fix it.

The following chart shows the profile of an idle-in-transaction case over a period of two weeks, before and after fixing the problem.

As you can see, we’re talking about tens of seconds during which connections are being held in an unusable state. In the context of a user waiting for a page to load, that is an excruciatingly long period of time.

SQLAlchemy works with sessions that are, simply put, in-memory caches of model instances. The code behind these sessions is quite complex, but usage boils down to either explicit session reference...

...or implicit usage.

Both of these approaches will ensure a transaction is opened and will not close it until a later session.commit() or session.rollback(). There's actually nothing wrong with calling session.commit() when you need to explicitly close a transaction that you know is open and you’re done using the DB in that particular scope.

To address the idle-in-transaction problem generated by such a line, we must keep the code between the query and the commit relatively short and fast (i.e. avoid blocking calls or CPU-intensive operations).

It sounds simple enough, but what happens if we access an attribute of a DB model after session.commit()? It will open another transaction and leave it hanging, even though it might not need to hit the DB at all.

While we can't foresee what a developer will do with the DB object afterward, we can prevent usage that would hit the DB (and open a new transaction) by expunging it from the session. An expunged object will raise an exception if any unloaded (or expired) attributes are accessed. And that’s what we actually want here: to make it crash if misused, rather than leaving idle-in-transaction connections behind to block DB resources.

When working with multiple objects and complex queries, it’s easy to overlook the necessary expunging of those objects. It only takes one un-expunged object to trigger the idle-in-transaction problem, so you need to be consistent.

Objects can't be used for any kind of DB interaction after being expunged. So how do we make it clear and obvious that certain objects are to be used only within a limited scope? The answer is a Python context manager to handle SQLAlchemy transactions and connections. Not only does it allow us to visually limit object usage to a block, but it also ensures everything is prepared for us and cleaned up afterwards.

The construct above normally opens a transaction block associated with a new SQLAlchemy session, but we've added a new expunge keyword to the begin method, instructing SQLAlchemy to automatically expunge objects associated with the block's session (the tx.session). To get this kind of behavior from a session, we need to override the begin method (and friends) in a subclass of SQLAlchemy's Session.

We want to keep the default behavior and use a new ExpungingTransaction instead of SQLAlchemy's SessionTransaction, but only when explicitly instructed to by the expunge=True argument.

You can use the class_ argument of sessionmaker to instruct it to build an ExpungingSession instead of a regular Session.

The last piece of the puzzle is the ExpungingTransaction code, which is responsible for two important things: committing the session so the underlying transaction gets closed and expunging objects so that we don't accidentally reopen the transaction.

By following these steps, you get a useful context manager that forces you to group your DB interaction into a block and notifies you if you mistakenly use (unloaded) objects outside of it.

What if we really need to access DB models outside of an expunging context?

Simply passing models to functions as arguments helps achieve a great goal: decoupling the retrieval of models from their actual usage. However, such functions are no longer in control of what happens to those models afterwards.

We don't want to forbid all usage of models outside of this context, but we need to somehow inform the user that the model object comes “as is,” with whatever loaded attributes it has. It's disconnected from the DB and shouldn't be modified.

In SQLAlchemy, when we modify a live model object, we expect the change to be pushed to the DB as soon as commit or flush is called on the owning session. With expunged objects this is not the case, because they don't belong to a session. So how does the user of such an object know what to expect from a certain model object? The user needs to ensure that she:

To safely and explicitly pass along this kind of model object, we introduced frozen objects. Frozen objects are basically proxies to expunged models that won't allow any modification.
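A frozen proxy needs very little machinery in plain Python. The sketch below forwards attribute reads to the wrapped object and rejects writes. It is a hypothetical illustration, not the class we use. `freeze` is shown as a free function rather than a method on ExpungingSession so that it runs without a database.

```python
class Frozen:
    """Read-only proxy around an expunged model instance (hypothetical sketch).

    Reads are forwarded to the wrapped object; assignment and deletion raise.
    Mutable attribute values (e.g. a dict stored on the model) could still be
    changed in place: the proxy only blocks attribute assignment.
    """

    __slots__ = ("_obj",)

    def __init__(self, obj):
        object.__setattr__(self, "_obj", obj)

    def __getattr__(self, name):
        # Only called when normal lookup fails, i.e. for the model's attributes.
        return getattr(object.__getattribute__(self, "_obj"), name)

    def __setattr__(self, name, value):
        raise AttributeError(f"cannot set {name!r}: object is frozen")

    def __delattr__(self, name):
        raise AttributeError(f"cannot delete {name!r}: object is frozen")

    def __repr__(self):
        return f"Frozen({object.__getattribute__(self, '_obj')!r})"


def freeze(session, obj):
    """Detach obj from the session (if attached) and wrap it read-only.

    Works with any session-like object supporting `obj in session` and
    `session.expunge(obj)`, as SQLAlchemy's Session does.
    """
    if obj in session:
        session.expunge(obj)
    return Frozen(obj)
```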

To work with these frozen objects, we added a freeze method to our ExpungingSession:

So now our code would look something like this:

Now, what if we want to modify the object outside of this context later on (e.g., after a long-lasting HTTP request)? As our frozen object is completely disconnected from any session (and from the DB), we need to fetch a warm instance associated with it from the DB and make our changes to that instance. This is done by adding a helper fetch_warm_instance method to our session...

...and then our code that modifies the object would say something like this.

When the second context manager exits, it will call commit on tx.session, and changes to my_model will be committed to the DB right away.

We now have a way of safely dealing with models without generating idle-in-transaction problems, but the code quickly becomes a mess if we have to deal with relationships: We need to freeze them separately and pass them along as if they aren’t related. This could be overcome by telling the freeze method to freeze all related objects, recursively walking the relationships.

We'll have to make some adjustments to our frozen proxy class as well.

Now, we can fetch, freeze, and use frozen objects with any preloaded relationships.

While the code to access the DB with SQLAlchemy may look simple and straightforward, one should always pay close attention to transaction management and the subtleties that arise from the various layers of the persistence stack.

We learned this the hard way, when our services eventually started to exhaust the DB resources many years into development.

If you recently decided to use a software stack similar to ours, you should consider writing your DB access code in such a way that it avoids idle-in-transaction issues, even from the first days of your project. The problem may not be obvious at the beginning, but it becomes painfully apparent as you scale.

If your project is mature and has been in development for years, you should consider planning changes to your code to avoid or to minimize idle-in-transaction issues, while the situation is still under control. You can start writing new idle-in-transaction-proof code while planning to gradually update existing code, according to the capacity of your development team.

Like any major topic in your company, your compensation policy should reflect your organizational values.

At Gorgias, we created a compensation calculator that reflected ours, setting salaries across the organization based on 3 key principles:

Since the beginning, we applied the first two: Each of our employees was granted data-driven stock options that beat the market average.

However, we were challenged internally: Our team members asked how much they would make if they switched teams or if they got promoted.

This led to the implementation of our third key principle, as we shared the compensation calculator with everyone at Gorgias and beyond: See the calculator here.

This was not a small challenge. We’re sharing our process in hopes that we can help other companies arrive at equitable, transparent compensation practices.

First, let’s get back to how we built the tool. We had to decide which criteria we wanted to take into account. Based on research articles and benchmarks on what other companies did before, we decided that our compensation model would be based on 4 factors: position, level, location, and strategic orientation.

To sum it up briefly, our formula looks like this:

Average of Data (for the position at defined percentile & Level) x Location index
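Once the inputs are gathered, the formula is a one-liner in code. In this Python sketch, the percentile policy comes from the strategic orientation described further down (90th percentile for engineering and product, 60th for other teams). The Paris index of 0.56 is the one quoted in this article. The benchmark salaries are made-up numbers.

```python
from statistics import mean


def target_percentile(team: str) -> int:
    """Percentile policy described in this article: 90th for engineering
    and product, 60th for every other team."""
    return 90 if team in {"engineering", "product"} else 60


def base_salary(benchmarks: list[float], location_index: float) -> int:
    """Average the benchmark values for one position and level (each one
    already read at the team's target percentile), then apply the location
    index. Rounded to whole currency units."""
    if not benchmarks:
        raise ValueError("need at least one benchmark value")
    return round(mean(benchmarks) * location_index)


if __name__ == "__main__":
    # Hypothetical figures from three salary databases for an engineer at
    # the 90th percentile, expressed in San Francisco terms (index 1.0).
    data = [180_000, 190_000, 200_000]
    print(target_percentile("engineering"))  # 90
    print(base_salary(data, 1.0))            # 190000 in San Francisco
    print(base_salary(data, 0.56))           # 106400 in Paris
```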

This is the job title someone has in the company. It looks simple, but it can be challenging to define! Even if the titles don’t really vary from one company to another, people might have different duties, deal with much bigger clients or have more technical responsibilities. Sometimes your job title or position doesn’t match the existing databases.

For some of these roles, when we thought our team members were doing more than the market average, we cross-referenced several databases to arrive at a fairer figure.

To assess a level we defined specific criteria in our growth plan for each job position. It is, of course, linked to seniority, but that is not the primary factor. When we hire someone, we evaluate their skills using specific challenges and case studies during our interview processes.

Depending on the database, you’ll find levels such as beginner, intermediate, and expert, which we represent as L1, L2, L3, etc. We decided to go with six levels from L1 to L6 for individual contributors and six levels in management, from team lead to C-level executive.

Our location index is based on the cost of living in a specific city (we rely on Numbeo for instance) and on the average salary for a position we hire (we use Glassdoor). Some cities are better providers of specific talents. By combining them, we get a more accurate location index.

When we are missing data for a specific city, we use the nearest one where we have data available.

Our reference is San Francisco, where the location index equals 1, meaning it’s basically the most expensive city in terms of hiring. For others, we have an index that can vary from 0.29 (Belgrade, Serbia) to 0.56 (Paris, France) to 0.65 (Toronto, Canada) etc. We now have 50+ locations in our salary calculator — a necessary consideration for our quickly growing, global team of full-time employees and contractors.
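The nearest-city fallback can be sketched as follows, using the index values quoted above. The article doesn't say how "nearest" is measured. Using great-circle distance between approximate city coordinates is an assumption here, as is the table's structure.

```python
from math import asin, cos, radians, sin, sqrt

# Index values quoted in this article; coordinates are approximate.
LOCATION_INDEX = {
    "San Francisco": (1.00, 37.7749, -122.4194),
    "Toronto": (0.65, 43.6532, -79.3832),
    "Paris": (0.56, 48.8566, 2.3522),
    "Belgrade": (0.29, 44.7866, 20.4489),
}


def haversine_km(lat1, lon1, lat2, lon2):
    """Great-circle distance between two points, in kilometres."""
    lat1, lon1, lat2, lon2 = map(radians, (lat1, lon1, lat2, lon2))
    a = sin((lat2 - lat1) / 2) ** 2 + cos(lat1) * cos(lat2) * sin((lon2 - lon1) / 2) ** 2
    return 2 * 6371.0 * asin(sqrt(a))


def location_index(city, lat, lon):
    """Return (city used, index): the city itself if we have data for it,
    otherwise the geographically nearest city that we do have data for."""
    if city in LOCATION_INDEX:
        return city, LOCATION_INDEX[city][0]
    nearest = min(
        LOCATION_INDEX,
        key=lambda known: haversine_km(lat, lon, *LOCATION_INDEX[known][1:]),
    )
    return nearest, LOCATION_INDEX[nearest][0]
```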

We rely on our strategic orientation to select which percentile we want to use in our databases. When we started Gorgias we were using the 50th percentile. As we grew (and raised funds), we wanted to be 100% sure that we were hiring the best people to build the best possible company.

High quality talent can be expensive (but not as expensive as making the wrong hires)! Obviously, we can’t pay everyone at the top of the market and align with big players like Google, but we can do our best to get close.

Since having the best product is our priority, we pay our engineering and product team at the 90th percentile, meaning their pay is in the top 10% of the industry. We pay other teams at the 60th percentile.

Some other companies take into account additional criteria, such as company seniority. We believe seniority should be reflected in equity rather than in salary. If you factor company seniority into salaries, some of your team members will eventually be paid out of line with the market. Those employees may stay in your company only because they won’t be able to find the same salary elsewhere.

Data is at the heart of our company DNA.

Where should you find your data? Data is everywhere! What matters most is the quality.

We look for the most relevant data on the market. If the database is not robust enough, we look elsewhere. So far we have managed to rely on several of them: Opencomp, Optionimpact, Figures.hr, and Pave are some major datasets we use for compensation. We’re curious and always looking for more. We’ll soon dig into Carta, Eon, and Levels. The more data we get, the more confident we are about the offers we make to our team.

Once we have the data, we apply our location index. It applies to both salaries and equity.

To build our equity package, we start from the compensation and then apply a “team” multiplier and a “level” multiplier. Those multipliers rely on data, of course: We use the same databases mentioned above, as well as Rewarding Talent documentation for Europe.
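As a worked example, the equity calculation multiplies the location-adjusted compensation by the two multipliers. The multiplier values below are hypothetical, since the article doesn't publish them.

```python
# Hypothetical multiplier tables; the article does not publish its values.
TEAM_MULTIPLIER = {"engineering": 0.5, "product": 0.5, "sales": 0.3}
LEVEL_MULTIPLIER = {"L1": 0.8, "L2": 1.0, "L3": 1.2}


def equity_grant_value(compensation: float, team: str, level: str) -> int:
    """Equity value = location-adjusted compensation x team x level multipliers.

    Because the compensation already includes the location index, the
    equity grant is location-adjusted as well.
    """
    return round(compensation * TEAM_MULTIPLIER[team] * LEVEL_MULTIPLIER[level])


if __name__ == "__main__":
    # 106_400 is a hypothetical Paris-adjusted salary for an L3 engineer.
    print(equity_grant_value(106_400, "engineering", "L3"))
```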

As we mentioned above, once our tool was robust enough, we shared it internally.

To be honest, checking and double-checking took longer than expected. But we all agreed that we’d rather release it to good reactions than rush it and create fear, so we postponed the release by one month to verify the results.

For the most effective release, we decided to do two things:

Overall, the reactions have been great. People loved the transparency and we got solid feedback.

We released the new calculator in September 2021, and overall we’re really happy with the response. We also had positive feedback from the update this month.

Let’s see how it goes with time.

Let’s be humble here: It’s only the beginning. It’s a Google Sheet. Of course, we’ll need to iterate on it.

In the meantime, you can check out the calculator here.

For now, we plan to review the whole grid every year. However, now that it’s public within the teams, we can collect feedback and make changes where needed. Everyone can add comments as they notice potential issues.

The next step for us is to publish it on our website so that candidates can see what we offer. We hope this level of transparency, along with the value of our compensation packages, will help us attract more talent.

I come from the world of physical retail, where building a bond was more straightforward. We often celebrated wins with breakfast and champagne (yes, I’m French!) or simply by clapping and cheering.

We would also have lunch together every day, engaging in many informal discussions.

Of course, it bonded us! I knew my colleagues’ dogs’ names and their plumbing problems, and I felt really close to many of them.

Employee engagement is one of the primary drivers of productivity, work quality, and talent retention. When I joined Gorgias, where we have a globally distributed team, I wondered how to create the sense of belonging that drives that engagement.

Like many companies now, our workforce is distributed. But at Gorgias, it’s a truly global affair: Our team lives in 17 countries, four continents, and many different time zones, which can be challenging.

And yet, I believe Gorgias culture is truly amazing and even better than the one I used to know.

I’ve realized that we achieved this by relying on the critical ingredients of a strong relationship.

By repeating these strong moments, you can make the connection between people stronger as well. The stronger the connection, the stronger the engagement.

Speaking of strong engagement, Gorgias’s eNPS (employee Net Promoter Score) is 50. How is this possible? Our company culture, and the way it connects our employees, is consistently cited as one of our main strengths.

Let’s take it further by exploring five actionable steps we have taken to make that happen.

While some would push back against events like these falling under the purview of the People team, they are important for building strong culture, team cohesion, and employee happiness — all areas that are definitely part of our directive.

Here’s what you need to know to bring these summits to your organization.

As the name states, it’s a virtual event where the whole company connects.

Attendance isn’t mandatory, but it’s highly recommended because it’s fun and you learn a lot.

It’s a mix of company updates, fun moments, and inspiring sessions. Each session is short, to give everyone room to breathe.

Typically we have three kinds of sessions:

Because of time zones, some sessions don’t include every country.

Our last virtual summit cost us roughly $13,000, which means $65 per head. Here’s the breakdown:

The first thing you might already have in mind is: It takes time! And you’re right.

The more we grow, the more challenging it becomes to organize these events.

I believe we’ll eventually need to have a dedicated event manager for all of our physical and virtual events. I want to have them within my team, and I 100% believe it’s worth it.

Another challenge can be technical difficulties with your event software choice, so make sure that you find a reliable platform that suits your needs.

Our team is a mix of hybrid and full-remote workers.

Since we don’t want the full-remote people to become disconnected, we highly encourage them to join the nearest hub once a quarter.

And when they do, we organize some happy hours, games or movie nights. Those face-to-face activities help create bonds between employees. It’s simple and doesn’t require a lot of organization, but it creates an incredible moment every time the remote teams join. We call them Gorgias Weeks.

We were fortunate to be able to organize our company offsite and gather a massive part of the crew together in October 2021.

The pandemic created doubt and additional points of stress, but looking back I’m so glad we were able to create an opportunity for everyone to meet in person.

We asked everyone to bring a health pass — full vaccination or PCR test — and we picked a location that allowed for a lot of outdoor activities.

We made sure the agenda for the two days was not too busy. As with our virtual summit, it was a balance of company alignment, learning, and fun. We made sure people had enough free time to relax, talk to each other, play games, or play sports.

The company offsite is an essential moment for us, and it helps create strong bonds and great memories.

We encourage every team to organize their own offsite for team-building purposes. Since people don’t meet a lot physically, having these once a year is great!

We let each team lead own it: they pick the location and the agenda, and we provide guidelines along with the budget.

Needless to say, it helps build stronger bonds and great memories.

In my experience, it was quite tough to create those moments internally with the team. That’s why we decided to start our team meeting with a fun activity of 10-15 minutes, where we are able to share more than just work.

Every week, there is a different meeting owner who has to come up with new fun activities and games. Starting the meeting with this kind of ice-breaking activity brings powerful energy, and people are more engaged and effective in the sessions. I would recommend it to everyone, especially to those who think, “We already have so many things to review in those weekly meetings, we don’t have time for that.” Try it once, you’ll see how the energy and productivity are different afterward.

On top of that, I believe tools that encourage colleagues to meet at random are great. We use Donut, which sends a weekly reminder that encourages employees to make it to their meeting with a colleague.

Overall, we’ve organized six virtual summits, four company retreats, three Gorgias weeks, and hundreds of virtual coffee and fun meetings.

At the beginning there were only 30 people in the company — now there are 200 of them. As I mentioned, it’s becoming more and more challenging to organize these meetups, but it’s also the most exciting part: making sure the next summit is better than the previous one!

Of course, I’m aware that employee fulfillment and connection are not the only ingredients for retention. But they are key ingredients and shouldn’t be forgotten, especially as we all become more remote.

It’s a worthy investment to organize these events and allocate resources to them, because it makes everyone at Gorgias feel included and connected. And I have no doubt, now, that it’s part of our responsibilities in People Ops.

When a customer's problem goes unanswered on Twitter, you lose that customer and possibly the audience of people who watched it happen.

It’s hard to come back from that, which is why customer care is so important on social media platforms. In fact, Shopify found 57% of North American consumers are less likely to buy if they can’t reach customer support in the channel of their choice.

Your customers want to talk to you — and you should want the same, before they head to a competitor. But first, you need to build a customer support presence on Twitter that lives up to your broader customer experience.

We've helped over 8,000 brands upgrade their customer support and seen the best and worst of social media interactions. Here are our top 10 battle-tested best practices for providing exceptional Twitter support.

Prompt response time is one of the most important pillars of great customer service, and according to data from a survey conducted by Twitter, 75% of customers on Twitter expect fast responses to their direct messages.

Of course, responding with accurate and helpful information is ultimately even more important than responding in real time, so be sure that you don't end up providing inaccurate information in a rush to reduce your response times.

Promptly and accurately responding to customer service issues that are sent to your company's Twitter account is often easier said than done. To do both, you need an efficient system and a well-trained customer support team.

This is where a helpdesk becomes critical: it brings your Twitter conversations into a central feed with all your other tickets.

If you’re trying to manage Twitter natively in a browser, or through copy-paste discussions with your social media manager, you’re not going to see the first-response times you need to succeed.

As data from Twitter's survey shows, speed is a necessity in order to meet customer expectations and provide a positive experience.

Sometimes customers will contact your Twitter support account by mentioning you in a tweet rather than sending a direct message. In fact, according to data from Twitter, one in every four customers on Twitter will tweet publicly at brands in the hope of getting a faster response. In these cases, it’s important to move the conversation out of the public space and into DMs as soon as possible.

There are a couple of reasons you would want to avoid resolving customer service issues on a public forum. For one, keeping customer service conversations private allows you to maintain better control over your brand voice and image since customer service conversations can often get a little messy and may not be something you want to broadcast to your entire audience.

Moving conversations out of the public space also lets you collect the personal details you need to help, such as the customer’s phone number, other contact information, and order details, without exposing that information publicly.

In Gorgias, you can set up an auto-reply rule that responds to public support questions and directs them to send a DM for further help. This can ensure that people feel heard immediately, even if it takes a while for your team to get to their DM.
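The code below is not Gorgias’s rule syntax or API. It is a hypothetical Python sketch of the logic such an auto-reply rule encodes: when a public mention or reply looks like a support question and hasn’t been answered yet, post a short reply inviting the customer to DM. The `TwitterEvent` type, its fields, the keyword list, and the reply text are all assumptions for illustration.

```python
from dataclasses import dataclass
from typing import Optional

AUTO_REPLY = "Thanks for reaching out! Please send us a DM with your order number so we can help."

# Hypothetical, deliberately naive signals that a public tweet is a support question
SUPPORT_KEYWORDS = ("order", "refund", "shipping", "help", "?")


@dataclass
class TwitterEvent:
    kind: str                 # "mention", "reply", or "dm" (hypothetical labels)
    author: str               # handle without the leading "@"
    text: str
    already_answered: bool = False


def looks_like_support(text: str) -> bool:
    lowered = text.lower()
    return any(keyword in lowered for keyword in SUPPORT_KEYWORDS)


def auto_reply_for(event: TwitterEvent) -> Optional[str]:
    """Return the public reply to post, or None if the rule should not fire."""
    is_public = event.kind in ("mention", "reply")
    if is_public and not event.already_answered and looks_like_support(event.text):
        return f"@{event.author} {AUTO_REPLY}"
    # DMs are already private, so they go straight to the agent queue
    return None
```

A real rule would likely use better intent detection than keywords, but the shape is the same: acknowledge publicly right away, then continue the conversation privately.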

Regardless of whether you are discussing an issue with a customer via your Twitter account or any other medium, it is never a good idea for your reps to get into arguments with the customer.

Social media platforms such as Twitter tend to have a much more informal feel than other contact methods, and they also tend to sometimes bring out the worst in the people who hide behind the anonymity that they provide. You may end up finding that customers who contact you via Twitter are sometimes a little more argumentative than customers who contact you via more formal channels.

Nevertheless, it is essential for your Twitter support reps to maintain professionalism and avoid engaging in emotional arguments with customers. It may even help to establish guidelines for your team, to help deal with this type of customer tweet. You can include rules on emoji use, helpful quick-response scripts, and whatever other priorities you have.

Recommended reading: How to respond to angry customers

It is certainly possible to use Twitter alone when providing customer support via the platform. However, this isn't always the most efficient way to go about it.

Keep in mind that, like other social networks, Twitter wasn't necessarily designed to be a customer support channel. There aren't a lot of Twitter features beyond basic notifications that will be able to help your team organize support tickets.

Thankfully, there are third-party solutions that let your support agents respond to tweets and Twitter direct messages outside of Twitter, in a much more organized and efficient way. At Gorgias, for example, we offer a Twitter integration that automatically creates support tickets anytime someone mentions your brand, replies to your brand’s tweets, or sends your brand a direct message. (By the way, we also offer integrations for Facebook Messenger and WhatsApp.)

Agents can then respond to these messages and mentions directly from the Gorgias platform, where they will show up in the same dashboard as the tickets from your other support channels.
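As a rough illustration of what a single dashboard for every channel means, here is a hypothetical Python sketch that turns Twitter mentions, replies, and DMs into tickets and merges them with an email ticket into one queue ordered by arrival time. The `Ticket` fields and channel names are assumptions; this is not the Gorgias integration’s actual data model.

```python
from dataclasses import dataclass
from datetime import datetime


@dataclass(frozen=True)
class Ticket:
    channel: str          # e.g. "twitter-mention", "twitter-dm", "email"
    customer: str
    body: str
    received_at: datetime
    public: bool          # public tweets may need to be moved to DMs


def twitter_ticket(kind: str, handle: str, text: str, received_at: datetime) -> Ticket:
    """Turn a Twitter mention, reply, or DM into a ticket."""
    if kind not in ("mention", "reply", "dm"):
        raise ValueError(f"unsupported Twitter event: {kind}")
    return Ticket(channel=f"twitter-{kind}", customer=handle, body=text,
                  received_at=received_at, public=kind != "dm")


def unified_queue(tickets: list[Ticket]) -> list[Ticket]:
    """Oldest first, regardless of channel, so no channel's first response lags behind."""
    return sorted(tickets, key=lambda t: t.received_at)


queue = unified_queue([
    Ticket("email", "sam@example.com", "Wrong size", datetime(2022, 3, 1, 9, 30), public=False),
    twitter_ticket("mention", "@jane", "Where is my order?", datetime(2022, 3, 1, 9, 0)),
    twitter_ticket("dm", "@lee", "Can I change my address?", datetime(2022, 3, 1, 9, 15)),
])
# Order: @jane's mention (9:00), @lee's DM (9:15), then the email (9:30)
```

Ordering by arrival time across channels is one simple policy; a helpdesk might also prioritize public tweets, since others can see them.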

This integration makes Twitter customer support far more efficient for your team and is one of the most effective ways to take your Twitter customer support services to the next level.

It is always important to respond to all questions and feedback that customers provide via Twitter, even if that feedback is negative. This is an important part of relationship marketing.